AES Encryption and Decryption (Java)

Java implementation of encryption and decryption

Add the dependency

1 |

|

Code

1 | import org.slf4j.Logger; |

Java implementation

1 |

|

1 | import org.slf4j.Logger; |

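SM2 is an elliptic-curve public-key cryptographic algorithm published by the State Cryptography Administration of China on December 17, 2010.

Java implementation

1 |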

|

1 | import org.slf4j.Logger; |

SM4 is a block cipher published by the State Cryptography Administration of China on March 21, 2012.

Java implementation

1 |

|

1 | import org.slf4j.Logger; |

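etcd is an open-source distributed key-value store for the most critical data of a distributed system.

It replicates data across multiple machines, so it stays highly available when a single node fails.

Using the Raft consensus algorithm, etcd gracefully handles network partitions and machine failures, including failure of the leader.

etcd is widely used in production: CoreOS, Kubernetes, YouTube Doorman, and others.

My environment has these hosts:

- 192.168.2.158 etcd-158
- 192.168.2.159 etcd-159
- 192.168.2.160 etcd-160

etcd cluster guide

CA

1 | yum install ntpdate -y |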

1 | rm -f ~/etcd-v3.5.4-linux-amd64.tar.gz |

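Run on host158

Root CA certificate

1 | #rm -f /opt/cfssl* |

Generate

1 | # Create the root certificate signing request (CSR) file |

Generate the CA certificate and private key

1 | # Generate |

Result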

1 | # CSR configuration |

1 | # peer |

1 | # Copy to every node |

Run on host158

1 | groupadd etcd && useradd -g etcd etcd && echo '1' | passwd --stdin etcd |

host158

1 | rm -rf /opt/etcd/etcd-158 |

host159

1 | rm -rf /opt/etcd/etcd-159 |

host160

1 | rm -rf /opt/etcd/etcd-160 |

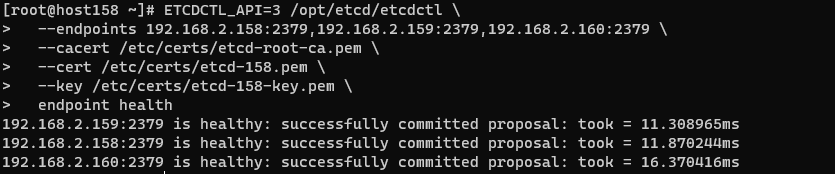

1 | ETCDCTL_API=3 /opt/etcd/etcdctl \ |

Note: the service only starts successfully after all three nodes have been configured.

host158

1 | rm -rf /opt/etcd/etcd |

host159

1 | rm -rf /opt/etcd/etcd |

host160

1 | rm -rf /opt/etcd/etcd |

1 | # to get logs from service |

1 | ENDPOINTS=192.168.2.158:2379,192.168.2.159:2379,192.168.2.160:2379 |

1 | export ETCDCTL_API=3 |

Note: the following needs to be added:

ENDPOINTS=192.168.2.158:2379,192.168.2.159:2379,192.168.2.160:2379

--endpoints ${ENDPOINTS} ${ETCD_AUTH}

--cacert /etc/certs/etcd/ca.pem \

--cert /etc/certs/etcd/etcd-158.pem \

--key /etc/certs/etcd/etcd-158-key.pem \
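The `ENDPOINTS` and `ETCD_AUTH` variables used by the etcdctl commands in this post could be assembled as in the sketch below. It assumes the three hosts and the certificate paths listed above; `join_endpoints` is a hypothetical helper, and the script only prints the resulting command instead of running it.

```shell
#!/bin/sh
# Join host addresses into the comma-separated list that etcdctl expects.
# Usage: join_endpoints PORT HOST...
join_endpoints() {
  je_port=$1
  shift
  je_out=""
  for je_host in "$@"; do
    je_out="${je_out:+${je_out},}${je_host}:${je_port}"
  done
  printf '%s\n' "$je_out"
}

ENDPOINTS=$(join_endpoints 2379 192.168.2.158 192.168.2.159 192.168.2.160)

# TLS flags; the paths are the ones used on host158 in this post.
ETCD_AUTH="--cacert /etc/certs/etcd/ca.pem --cert /etc/certs/etcd/etcd-158.pem --key /etc/certs/etcd/etcd-158-key.pem"

# Print the command that would be run, without contacting the cluster.
echo "etcdctl --endpoints=${ENDPOINTS} ${ETCD_AUTH} endpoint health"
```

On another node, replace the `etcd-158` certificate and key with that node's own files.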

key-value

1 | export ETCDCTL_API=3 |

1 | etcdctl --endpoints=${ENDPOINTS} ${ETCD_AUTH} put web1 value1 |

1 | etcdctl --endpoints=${ENDPOINTS} ${ETCD_AUTH} put user1 bad |

keys

1 | etcdctl --endpoints=${ENDPOINTS} ${ETCD_AUTH} watch stock1 |

lease

1 | etcdctl --endpoints=${ENDPOINTS} ${ETCD_AUTH} lease grant 300 |

locks

1 | etcdctl --endpoints=${ENDPOINTS} ${ETCD_AUTH} lock mutex1 |

How leader election is done in an etcd cluster

1 | etcdctl --endpoints=${ENDPOINTS} ${ETCD_AUTH} elect one p1 |

1 | etcdctl --endpoints=${ENDPOINTS} ${ETCD_AUTH} endpoint health |

Snapshot can only be requested from one etcd node, so --endpoints flag should contain only one endpoint.

1 | ENDPOINTS=192.168.2.158:2379 |

1 | etcdctl --write-out=table --endpoints=${ENDPOINTS} ${ETCD_AUTH} snapshot status my.db |
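Because a snapshot must be requested from a single member, a small guard before the snapshot commands can catch a leftover multi-endpoint `ENDPOINTS` value. This is a minimal sketch; `is_single_endpoint` is a hypothetical helper, not part of etcdctl.

```shell
#!/bin/sh
# Succeed only when the value names exactly one endpoint (non-empty, no comma).
is_single_endpoint() {
  case $1 in
    ''|*,*) return 1 ;;
    *) return 0 ;;
  esac
}

ENDPOINTS=192.168.2.158:2379
if is_single_endpoint "$ENDPOINTS"; then
  echo "ok: snapshot can be requested from ${ENDPOINTS}"
else
  echo "error: pass exactly one endpoint for snapshot commands" >&2
fi
```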

1 | export ETCDCTL_API=3 |

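For an etcd cluster, an odd number of members is recommended. The table below shows how many failed members the cluster can tolerate at each cluster size:

| Cluster size | Majority | Failure tolerance |
|---|---|---|
| 1 | 1 | 0 |
| 2 | 2 | 0 |
| 3 | 2 | 1 |
| 4 | 3 | 1 |
| 5 | 3 | 2 |
| 6 | 4 | 2 |
| 7 | 4 | 3 |
| 8 | 5 | 3 |
| 9 | 5 | 4 |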
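Both columns follow from the cluster size n: a Raft majority is floor(n/2) + 1, and the failure tolerance is n minus that majority. The sketch below computes them; the function names are illustrative only.

```shell
#!/bin/sh
# Majority (quorum) needed for a cluster of n members: floor(n/2) + 1.
majority() {
  echo $(( $1 / 2 + 1 ))
}

# Members that may fail while the cluster still has a majority.
fault_tolerance() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 2 3 4 5 6 7 8 9; do
  printf '%s members: majority %s, tolerates %s failure(s)\n' \
    "$n" "$(majority "$n")" "$(fault_tolerance "$n")"
done
```

Going from an odd size to the next even size raises the majority without raising the failure tolerance, which is why odd sizes are recommended.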

1 | useradd yy |

Prometheus installation

prometheus-2.36.2.windows-amd64.zip

prometheus-2.36.2.linux-amd64.tar.gz

prometheus-web-ui-2.36.2.tar.gz

1 | mkdir ~/tools |

No changes needed.

1 | cp ~/prometheus/prometheus.yml ~/prometheus/prometheus.yml-bak |

1 | n9e_home=/home/yy/prometheus |

1 | mkdir ~/tools |

1 | # Redis and the DB need to be configured in it |

1 | vim /etc/security/limits.conf |

Append at the end:

1 | * soft nproc 65535 |

1 | cd /home/yy/n9e |

root/root.2020

Telegraf

1 | mkdir ~/tools && cd ~/tools |

1 | cp ~/telegraf/etc/telegraf/telegraf.conf ~/telegraf/etc/telegraf/telegraf.conf-bak |

Modify these settings:

1 | cat <<EOF >~/telegraf/etc/telegraf/telegraf.conf |

1 | nohup ~/telegraf/usr/bin/telegraf --config ~/telegraf/etc/telegraf/telegraf.conf &> telegraf.log & |

categraf-v0.2.13-linux-amd64.tar.gz

1 | cd ~/ |

1 | vim ~/categraf/conf/config.toml |

1 | [writer_opt] |

1 | vim ~/categraf/conf/input.mysql/mysql.toml |

1 | cat <<EOF >~/categraf/conf/input.net_response/net_response.toml |

1 | [ |

1 | cat <<EOF >~/categraf/conf/input.redis/redis.toml |

The Redis address in this file needs to be changed.

1 | [ |

1 | nohup ~/categraf/categraf -configs ~/categraf/conf/ &> categraf.log & |

1 | #!/bin/bash |

Telegraf collection

1 | vim ~/telegraf/etc/telegraf/telegraf.conf |

1 | [[inputs.http_response]] |

1 | [[inputs.net_response]] |

1 | [[inputs.mysql]] |

1 | [[inputs.redis]] |

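In Monitoring > Dashboards > More actions, choose Import dashboard > Import built-in dashboard module, and import linux_by_telegraf.

Under More actions, choose Import dashboard > Import dashboard JSON.

1 | { |

1 | { |

1 | redis_active_defrag_running: whether active defragmentation is running [lw] |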

1 |

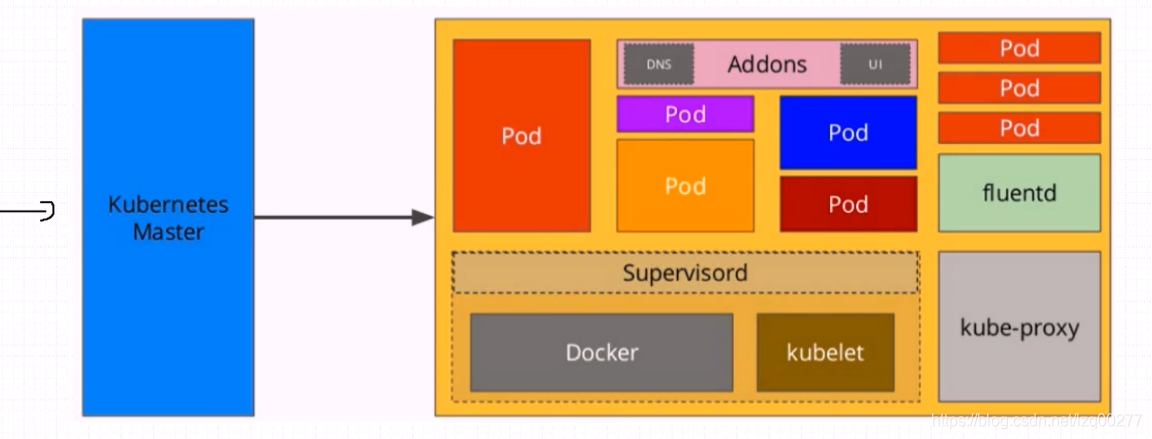

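This setup uses Kubernetes + containerd.io.

The Dashboard installation did not succeed.

My environment has these hosts:

- 192.168.2.158 master-158
- 192.168.2.159 master-159
- 192.168.2.160 master-160
- 192.168.2.161 node-161
- 192.168.2.240 nfs

May be needed:

Install Docker or containerd (covered below).

1 | su root |

Virtual machines need the ISO image mounted.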

1 | sudo systemctl stop firewalld |

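Docker mirror

1 | # The ISO image must be mounted |

Kubernetes repository configuration: /etc/yum.repos.d/kubernetes.repo

Use the Alibaba Cloud mirror:

1 | cat > /etc/yum.repos.d/kubernetes.repo <<EOF |

Refresh

1 | # Because the upstream site does not provide a sync mechanism, the GPG check of the index may fail |

Or use the Huawei Cloud mirror:

1 | cat > /etc/yum.repos.d/kubernetes.repo <<EOF |

Kubeadm installation

When in doubt, restart; if that does not help, delete everything and start over.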

1 | systemctl restart kubelet |

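Kubernetes dependencies

1 | # Uninstall docker |

- kubelet: node communication
- kubeadm: automated deployment tool
- kubectl: cluster management tool

ipvs dependencies

1 | # Install |

kubectl command completion (optional)

1 | sudo yum install -y bash-completion |

kubeadm

All nodes

Let iptables see bridged traffic

1 | # Make sure the br_netfilter module is loaded |

Uniqueness of the MAC address and product_uuid

- Use ip link or ifconfig -a to get the MAC addresses of the network interfaces.
- Use sudo cat /sys/class/dmi/id/product_uuid to check the product_uuid.

All nodes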

containerd

1 | # Disable swap |

Install containerd.io

1 | # Install containerd on all nodes |

Result:

1 | [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc] |

When using kubeadm, configure the kubelet cgroup driver manually.

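CA certificates (can be skipped)

| Path | Default CN | Description |
|---|---|---|
| ca.crt,key | kubernetes-ca | Kubernetes general CA |
| etcd/ca.crt,key | etcd-ca | For all etcd-related functions |
| front-proxy-ca.crt,key | kubernetes-front-proxy-ca | For the front-end proxy |

In addition to the CAs above, you also need a key pair for service account management: sa.key and sa.pub.

The following example shows the CA key and certificate files listed in the table above.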

1 | /etc/kubernetes/pki/ca.crt |

etcd cluster (with CA)

1 | kubeadm config images list |

Master node (strongly recommended)

Write the configuration

1 | # Write the configuration |

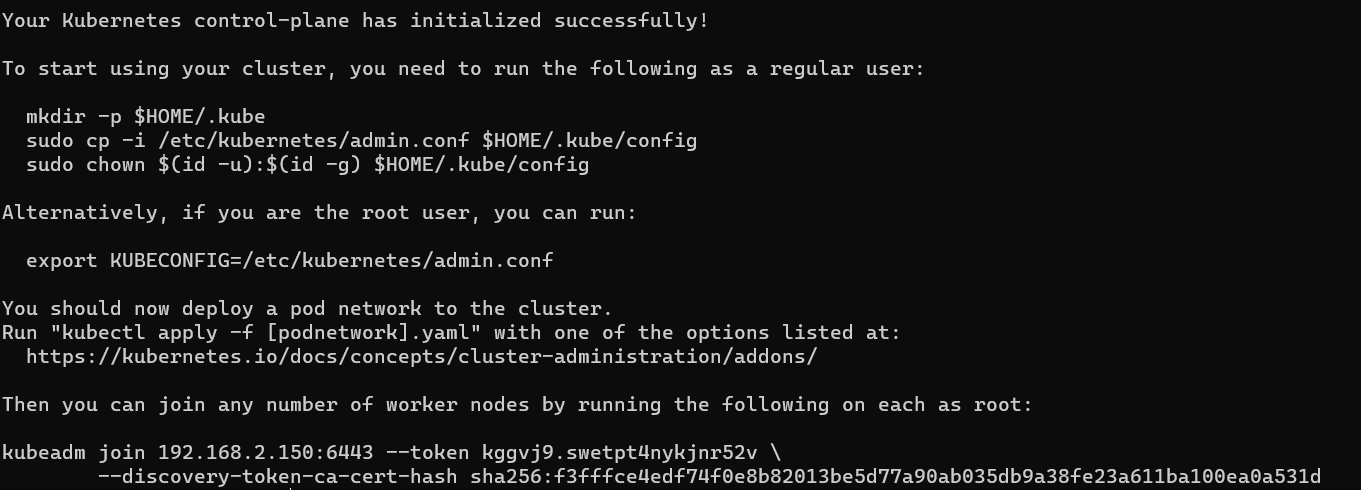

Create

1 | kubeadm init --config=kubeadm-config-init.yaml --v=5 |

Master node (not recommended)

1 | sudo kubeadm init \ |

kubectl configuration

1 | # Regular user |

Generate the node join command on host158:

1 | kubeadm token create --print-join-command |

host159

Add the certificates

1 | # Run on 158 |

Join

1 | # Run on 159: add the master-159 node |

Configure and verify

1 | mkdir -p $HOME/.kube |

host160

Add the certificates

1 | # Run on 158 |

Join

1 | # Run on 160: add the master-160 node |

Configure and verify

1 | mkdir -p $HOME/.kube |

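host161

Join

1 | # Add a worker node |

--experimental-control-plane joins the node as a control-plane node (newer kubeadm releases name this flag --control-plane).

Run on the control-plane node host158: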

1 | kubectl get nodes |

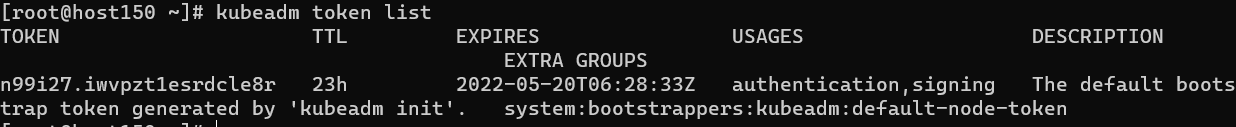

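Token

List the tokens:

1 | kubeadm token list |

Create a new token:

1 | kubeadm token create |

The output is similar to:

1 | 5didvk.d09sbcov8ph2amjw |

Create the join command directly:

1 | kubeadm token create --print-join-command --ttl=240h |

If you do not have the value of --discovery-token-ca-cert-hash, you can obtain it by running the following command chain on the control-plane node:

1 | # Default certificate: /etc/kubernetes/pki/ca.crt |

The output is similar to: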

1 | 5094a16f108636a64edc65194ef8f61b446f831ebab3265dda5723a394030ee1 |
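The hash is the SHA-256 digest of the CA certificate's DER-encoded public key. The sketch below wraps the usual openssl pipeline in a function; it assumes an RSA CA key (the kubeadm default) and that openssl is installed, and `ca_cert_hash` is a hypothetical helper name.

```shell
#!/bin/sh
# Print the SHA-256 hash of the public key in a CA certificate.
# The value goes after "sha256:" in --discovery-token-ca-cert-hash.
# Usage: ca_cert_hash [CERT]   (CERT defaults to /etc/kubernetes/pki/ca.crt)
ca_cert_hash() {
  openssl x509 -pubkey -noout -in "${1:-/etc/kubernetes/pki/ca.crt}" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Only runs on a machine that actually has the kubeadm CA certificate.
if [ -r /etc/kubernetes/pki/ca.crt ]; then
  echo "sha256:$(ca_cert_hash)"
fi
```

The printed value is passed to kubeadm join as `--discovery-token-ca-cert-hash sha256:<hash>`.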

1 | # View the certificates |

1 | /etc/kubernetes |

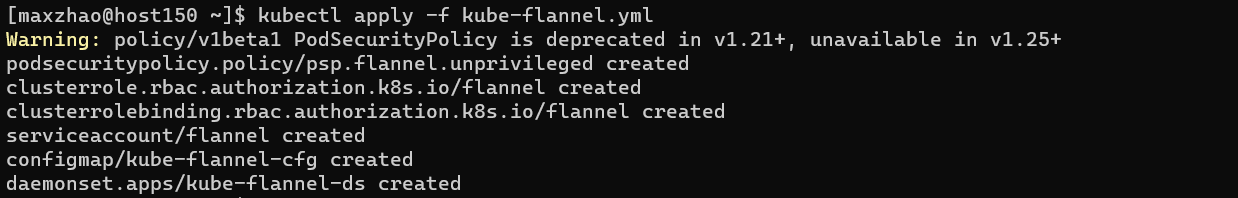

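Pod network plugins

- Flannel: the most mature and simplest choice (used here)
- Calico: good performance and the most flexible; currently the enterprise mainstream
- Canal: combines the network layer provided by Flannel with Calico's network policy features
- Weave: its distinctive feature is simple encryption of the whole network, at the cost of extra network overhead
- Kube-router: uses LVS for the Service network and BGP for the Pod network
- CNI-Genie: a component that lets Kubernetes use several CNI network plugins; it does not support isolation policies yet

Container network virtualization schemes for Kubernetes broadly fall into two kinds: tunnel-based and routing-based.

Flannel's vxlan mode and Calico's ipip mode are tunnel schemes. Flannel's host-gw mode and Calico's BGP mode are routing schemes.

Calico installation (recommended)

Flannel installation

Run on the master:

1 | cd ~ |

Recommended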

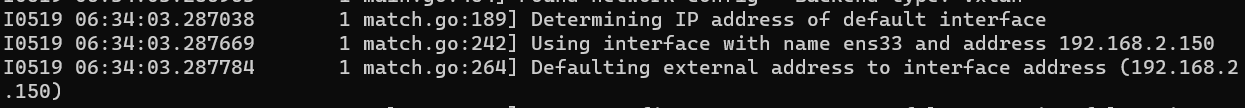

Flannel uses the Kubernetes API as its backing store, which avoids deploying a separate etcd cluster for Flannel.

Add the network interface to kube-flannel.yml

Without the network interface, Flannel reports this error:

Failed to find any valid interface to use: failed to get default interface: protocol not available

Error:

Failed to find interface

Failed to find interface to use that matches the interfaces and/or regexes provided

Enter the container:

kubectl -n kube-system exec -it kube-flannel-ds-f92wg bash

Change the network segment

If the --pod-network-cidr=10.244.0.0/16 value chosen at kubeadm init is different, kube-flannel.yml must be changed to match.
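A quick check before applying the manifest can confirm that the `Network` value in the `net-conf.json` section of kube-flannel.yml equals the Pod CIDR. This is a minimal sketch: the helper names are hypothetical, and the file name and CIDR default to the values used in this post.

```shell
#!/bin/sh
# Print the "Network" value from the net-conf.json section of a kube-flannel.yml file.
flannel_network() {
  sed -n 's/.*"Network"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Succeed when the manifest's Network equals the CIDR given to kubeadm init.
flannel_matches_cidr() {
  [ "$(flannel_network "$1")" = "$2" ]
}

manifest=${1:-kube-flannel.yml}
pod_cidr=${2:-10.244.0.0/16}
if [ -f "$manifest" ]; then
  if flannel_matches_cidr "$manifest" "$pod_cidr"; then
    echo "ok: ${manifest} uses ${pod_cidr}"
  else
    echo "mismatch: ${manifest} uses $(flannel_network "$manifest"), expected ${pod_cidr}" >&2
  fi
fi
```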

Apply

1 | kubectl apply -f kube-flannel.yml |

View the container configuration

1 | kubectl -n kube-system get ds kube-flannel-ds -o yaml |

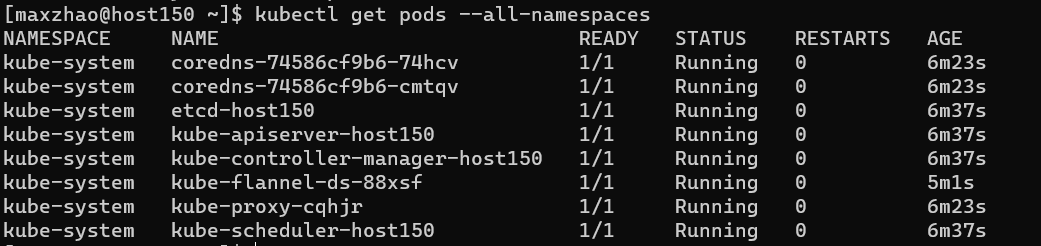

1 | kubectl get pods --all-namespaces |

1 | kubectl logs -n kube-system kube-flannel-ds-88xsf -f |

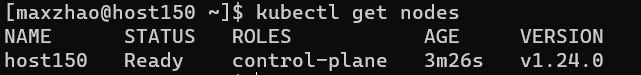

1 | kubectl get nodes |

etcd 集群指南1 | ETCDCTL_API=3 /opt/etcd/etcdctl \ |

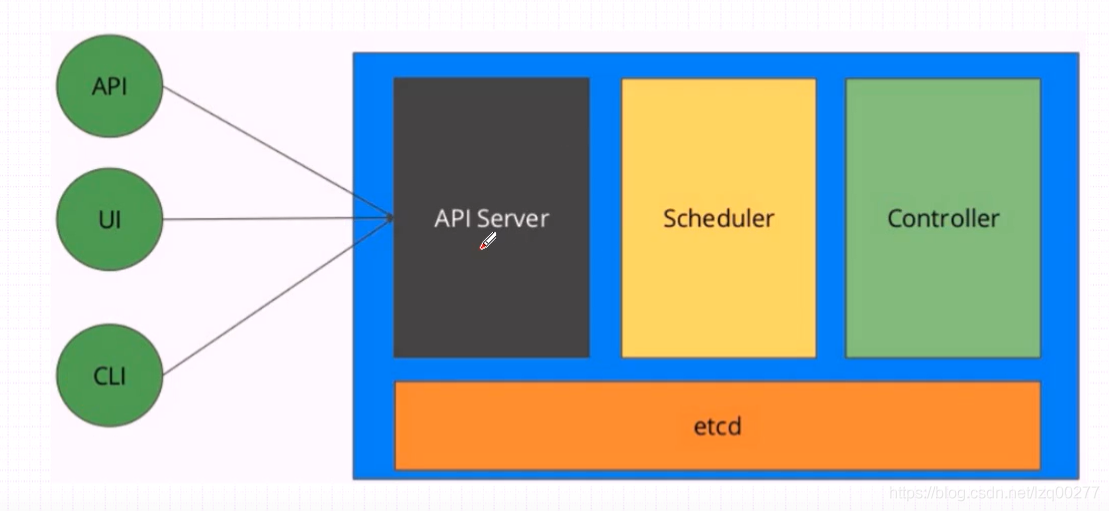

Kubernetes API 服务参数1 | # 服务地址(可以使用负载均衡) |

kubelet由于 etcd 是首先创建的,因此你必须通过创建具有更高优先级的新文件来覆盖 kubeadm 提供的 kubelet 单元文件。

1 | # 原文件 |

https://kubernetes.io/zh/docs/setup/best-practices/certificates/)

1 | cd ~ |

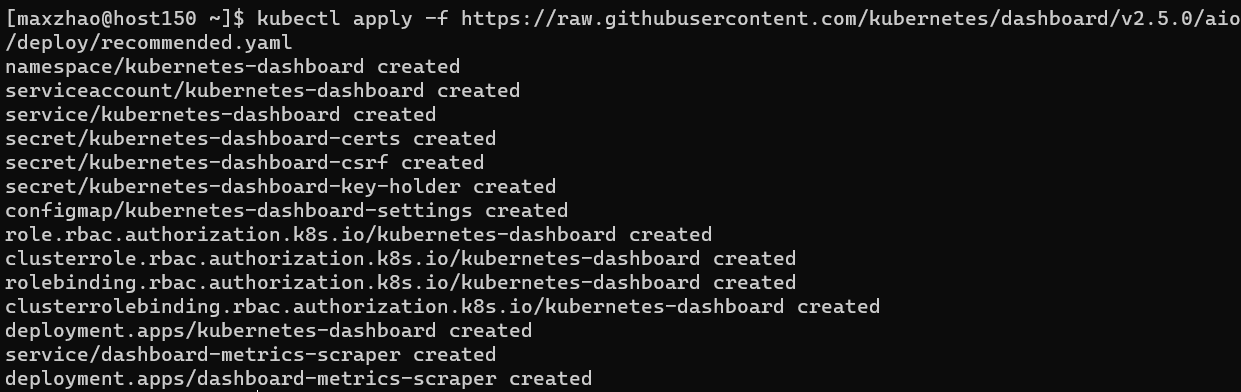

1 | kubectl get pods --all-namespaces |

Enable access to the Dashboard

1 | kubectl proxy |

1 | vim ~/dashboard-admin.yaml |

Write:

1 | apiVersion: v1 |

Grant permissions to the user

1 | vim ~/dashboard-admin-bind-cluster-role.yaml |

Write:

1 | apiVersion: rbac.authorization.k8s.io/v1 |

Apply the permissions

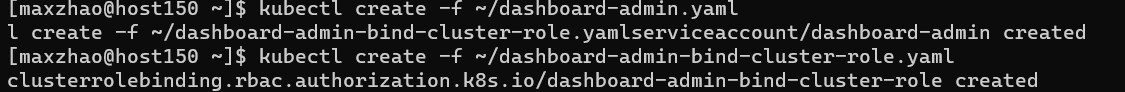

1 | kubectl create -f ~/dashboard-admin.yaml |

View and copy the token

1 | kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep dashboard-admin | awk '{print $1}') |

Open the Dashboard and log in with the token you just copied.

Deploy Nginx in the Kubernetes cluster:

kubectl create deployment nginx --image=nginx

kubectl expose deployment nginx --port=80 --type=NodePort

kubectl get pod,svc

Access URL: http://NodeIP:Port

Deploy Tomcat in the Kubernetes cluster:

kubectl create deployment tomcat --image=tomcat

kubectl expose deployment tomcat --port=8080 --type=NodePort

Access URL: http://NodeIP:Port

Deploying a microservice (Spring Boot application) on Kubernetes

1. Package the project (jar or war); tools such as git, Maven, and Jenkins can be used.

2. Write a Dockerfile and build the image.

3. kubectl create deployment nginx --image=<your image>

4. Your Spring Boot application is now deployed and runs as a container inside a Pod.

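1 | # Delete the local reference to the cluster; cluster name: k8s-cluster |

Remove Calico

1 | # Remove CNI |

Using appropriate credentials to talk to the control-plane node, run:

1 | kubectl drain <node name> --delete-emptydir-data --force --ignore-daemonsets |

Before removing the node, reset the state installed by kubeadm:

1 | echo "y" | kubeadm reset |

The reset process does not reset or clean up iptables rules or IPVS tables. If you wish to reset iptables, you must do so manually:

1 | iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X |

If you want to reset the IPVS tables, you must run the following command:

1 | ipvsadm -C |

Now remove the node:

1 | kubectl delete node <node name> |

If you wish to start over, run kubeadm init or kubeadm join with the appropriate arguments.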

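Containerd.io

1 | sudo yum remove -y containerd.io |

Kubernetes container runtime: dropping Docker and moving to containerd

Kubernetes02: Container runtimes: how to choose between Docker and containerd, and a hands-on containerd guide

CentOS 7 mirror

1 | # Back up |

Docker

Virtual machines need the ISO image mounted.

1 | # The ISO image must be mounted |

1 | # step 1: install the required system tools |

Containerd mirror

1 | vim /etc/containerd/config.toml |

Find [plugins."io.containerd.grpc.v1.cri".registry.mirrors]

Write: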

1 | [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"] |

Kubelet fails to start

1 | journalctl -xefu kubelet |

pod/kube-proxy CrashLoopBackOff

See the ipvs installation steps.

1 | # Determines how local traffic is detected; the default is LocalModeClusterCIDR |

could not find a JWS signature in the cluster-info ConfigMap

1 | # Use the kube config command to view cluster-info |

JWS problem during join

1 | The cluster-info ConfigMap does not yet contain a JWS signature for token ID "j2lxkq", will try again |

Here kubeadm token list shows that the tokens are all fine.

The JWS in cluster-info is only created once kube-controller-manager is running.

1 | kubectl get pods -A |

1 | # View |

NotReady

1 | kubectl describe nodes master-160 |

cni plugin not initialized

1 | sudo systemctl restart containerd |

failed to \"CreatePodSandbox\" for \"coredns

1 | no such file or directory: check that the calico/node container is running and has mounted /var/lib/calico/\"" |

This happens because Calico did not start successfully.

1 | # Check the node status |

Docker or containerd

The kubelet interacts with the container runtime through the Container Runtime Interface (CRI) to manage images and containers.

Common container runtime call chains:

- kubelet --> docker shim (inside the kubelet process) --> dockerd --> containerd
- kubelet --> cri plugin (inside the containerd process) --> containerd

| Item | Docker | Containerd |
|---|---|---|
| Who calls CNI | docker-shim inside the kubelet | cri-plugin built into containerd (containerd 1.1 and later) |
| How CNI is configured | kubelet flags --cni-bin-dir and --cni-conf-dir | containerd config file (toml): [plugins.cri.cni] bin_dir = "/opt/cni/bin" conf_dir = "/etc/cni/net.d" |

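| Item | Docker | Containerd |
|---|---|---|
| Storage path | When Docker is the Kubernetes container runtime, Docker writes the container logs to disk under a directory such as /var/lib/docker/containers/$CONTAINERID. The kubelet creates symlinks under /var/log/pods and /var/log/containers that point to the container log files in /var/lib/docker/containers/$CONTAINERID. | When containerd is the Kubernetes container runtime, the kubelet writes the container logs to disk under /var/log/pods/$CONTAINER_NAME and creates symlinks under /var/log/containers that point to the log files. |
| Configuration parameters | Set in the Docker configuration file: "log-driver": "json-file", "log-opts": {"max-size": "100m","max-file": "5"} | Option 1, kubelet flags: --container-log-max-files=5 --container-log-max-size="100Mi". Option 2, in KubeletConfiguration: "containerLogMaxSize": "100Mi", "containerLogMaxFiles": 5 |
| Saving container logs to a data disk | Mount the data disk at "data-root" (default: /var/lib/docker). | Create a symlink /var/log/pods that points to a directory under the data disk mount point. In TKE, choosing "Store containers and images on the data disk" creates the /var/log/pods symlink automatically. |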

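Kubernetes no longer supports Docker directly (dockershim was removed in Kubernetes 1.24).

containerd does not support the Docker API or the Docker CLI, but similar functionality is available through the cri-tools command crictl.

Choose Docker as the runtime component in the following cases:

- You use docker in docker.
- You run docker build/push/save/load and similar commands on TKE nodes.
- You need the Docker API.
- You need docker compose or docker swarm.

Common Docker and containerd commands

| Image operation | Docker | Containerd |
|---|---|---|
| List local images | docker images | crictl images |
| Pull an image | docker pull | crictl pull |
| Push an image | docker push | none |
| Remove a local image | docker rmi | crictl rmi |
| Inspect an image | docker inspect IMAGE-ID | crictl inspecti IMAGE-ID |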

| Container operation | Docker | Containerd |
|---|---|---|
| List containers | docker ps | crictl ps |
| Create a container | docker create | crictl create |
| Start a container | docker start | crictl start |
| Stop a container | docker stop | crictl stop |
| Remove a container | docker rm | crictl rm |
| Inspect a container | docker inspect | crictl inspect |
| attach | docker attach | crictl attach |
| exec | docker exec | crictl exec |
| logs | docker logs | crictl logs |
| stats | docker stats | crictl stats |

| Pod operation | Docker | Containerd |
|---|---|---|
| List Pods | none | crictl pods |
| Inspect a Pod | none | crictl inspectp |
| Run a Pod | none | crictl runp |
| Stop a Pod | none | crictl stopp |

The Dashboard installation did not succeed.

My environment has these hosts:

- 192.168.222.150 master
- 192.168.222.151 node
- 192.168.222.152 node

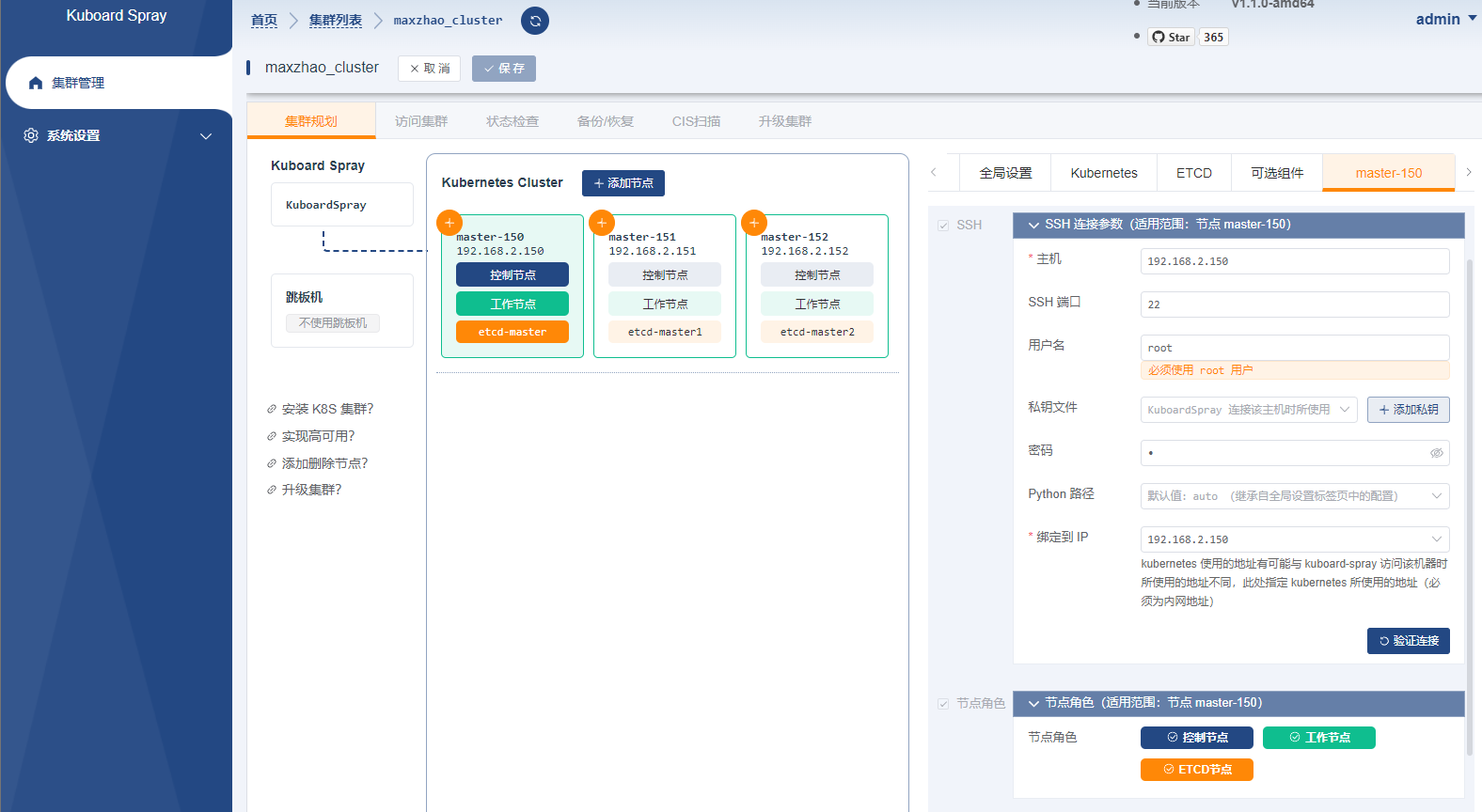

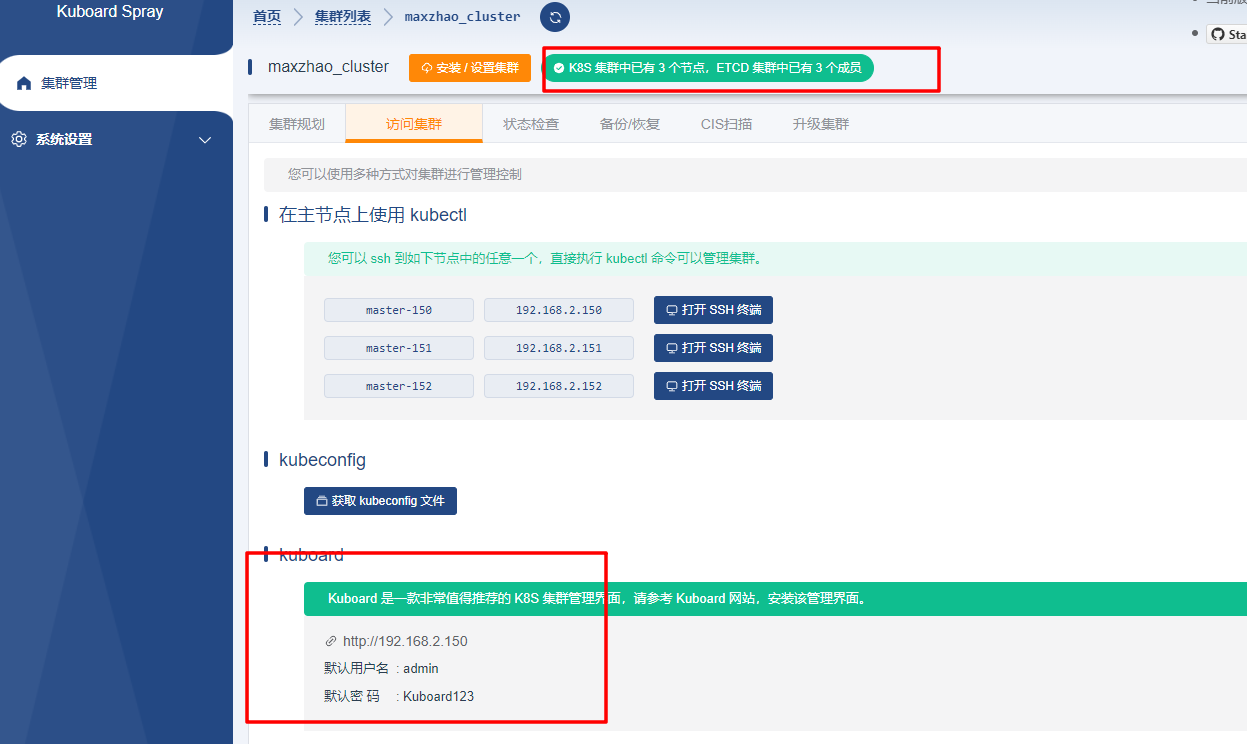

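Kuboard installation

This is only a record of the steps; see the official documentation for details.

Required hosts:

- 192.168.222.150 k8s master
- 192.168.222.151 k8s node
- 192.168.222.152 k8s node
- 192.168.222.251 Docker host running Kuboard-Spray; Kubernetes must not be installed on this machine

Note: virtual machines must use a fixed IP address.

- kubelet: node communication
- kubectl: cluster management tool

Kuboard-Spray

1 | sudo systemctl stop firewalld |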

1 | docker run -d \ |

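Open http://192.168.222.251 and log in to the Kuboard-Spray UI with user name admin and the default password Kuboard123.

On the System Settings -> Resource Package Management page, find the latest version (click Import for the version you need), then click the Load xxx action in the title.

For offline import, see the official documentation.

On the Cluster Management page, click Add Cluster Installation Plan and fill in the cluster name and resource package:

Notes

The minimum number of nodes is 1;

The total number of etcd nodes and control-plane nodes must be odd;

On the Global Settings tab you can set connection parameters shared by all nodes; for example, if every node uses the same ssh port, user name, and password, set them only here;

On a node's tab, if the node's roles include etcd, the ETCD member name field is required;

If the node running KuboardSpray cannot reach the Kubernetes cluster nodes directly, you can configure a jump host so that KuboardSpray reaches the cluster nodes over ssh.

During cluster installation, besides the imported resource package, some system software such as curl, rsync, ipvsadm, ipset, and ethtool is installed with yum or apt, which requires the operating system's apt or yum source. The Global Settings tab guides you through setting up the apt / yum source.

If you use docker as the cluster's container engine, you also need to specify the apt / yum source for installing docker on the Global Settings tab.

If you use containerd as the container engine, no docker apt / yum source is needed; the containerd packages are already included in the KuboardSpray offline resource package.

Only the path of the Aliyun CentOS source is new; the others can all be found when adding a source.

Configuring the source in the operating system is strongly recommended. For the mirror source, use the operating system default (see the reference configuration).

Note: virtual machines need the ISO image mounted (mount /dev/cdrom /mnt/cdrom); otherwise errors may occur.

The default configuration is recommended; for the mirror source, use the operating system default (see the reference configuration).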

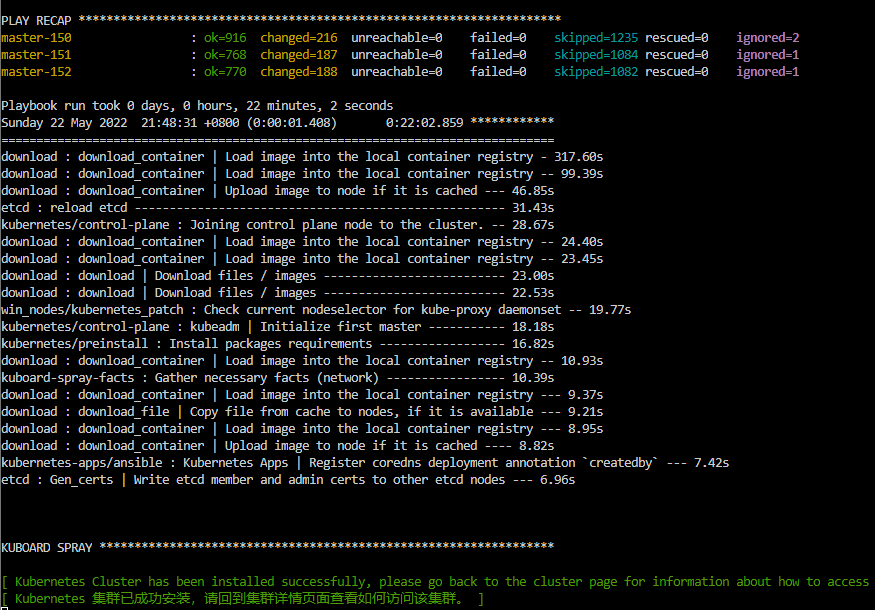

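Click Save, then click Install / Set Up Cluster.

The page offers three ways to access the Kubernetes cluster:

- kubectl commands
- the .kubeconfig file
- the Kuboard management UI

You can also install portainer.io.

The Kubernetes management tool used here is Portainer.

It is installed on 192.168.222.150.

StorageClass

1 | kubectl get sc |

If the result is No resources found, you need to create one.

StorageClass

https://blog.csdn.net/huwh_/article/details/96016423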

https://kubernetes.io/docs/concepts/storage/storage-classes/

https://docs.portainer.io/start/install/server/kubernetes/baremetal

Mark the StorageClass as the default

1 | kubectl patch storageclass maxzhao-cluster-storage-class -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}' |

Deploy using YAML manifests

1 | # Community Edition |

You can also use the Portainer Helm chart:

helm repo update

# Community Edition

helm install --create-namespace -n portainer portainer portainer/portainer --set tls.force=true

https://localhost:30779/ or http://localhost:30777/

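See the description in the Kuboard section.

Worker nodes that stop working may be caused by an IP address change; assign a fixed IP and reinstall the cluster.

Crash or cannot be accessed normally

1 | kubectl get pods --all-namespaces |

After a restart, many Pods may not be in the Running state. Use the command below to delete the Pods that are in an abnormal state. If a Pod was created by a controller such as a Deployment or StatefulSet, Kubernetes creates a replacement Pod, and the restarted Pod usually works normally.

1 | kubectl delete pod <pod-name> -n <pod-namespace> |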
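Picking the abnormal Pods out of the listing by hand gets tedious, so the sketch below filters the `kubectl get pods --all-namespaces` output and prints one delete command per Pod that is neither Running nor Completed. The function names and the sample Pod names are made up for illustration; the commands are only printed, not executed.

```shell
#!/bin/sh
# Read `kubectl get pods --all-namespaces` output on stdin and print
# "<namespace> <pod>" for every Pod whose STATUS is neither Running nor Completed.
unhealthy_pods() {
  awk 'NR > 1 && $4 != "Running" && $4 != "Completed" { print $1, $2 }'
}

# Print (do not run) a delete command for each unhealthy Pod.
print_delete_commands() {
  unhealthy_pods | while read -r ns pod; do
    echo "kubectl delete pod ${pod} -n ${ns}"
  done
}

# Demo with sample output; on a real cluster use:
#   kubectl get pods --all-namespaces | print_delete_commands
print_delete_commands <<'EOF'
NAMESPACE     NAME                      READY   STATUS             RESTARTS   AGE
kube-system   coredns-6d8c4cb4d-abcde   1/1     Running            0          3d
kube-system   kube-proxy-x2x9k          0/1     CrashLoopBackOff   12         3d
default       job-1-xyz                 0/1     Completed          0          1d
EOF
```

Review the printed commands before running them, since Pods that are not managed by a controller are not recreated.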

1 | sudo systemctl stop firewalld |

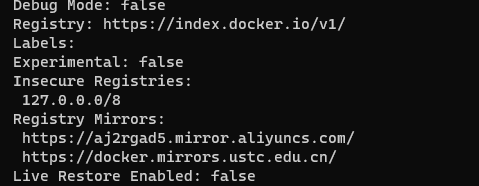

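Docker mirror

1 | sudo vim /etc/docker/daemon.json |

Change it to:

1 | { |

Remote access: "hosts": ["unix:///var/run/docker.sock", "tcp://127.0.0.1:2375"]

Kubernetes repository mirror

1 | sudo vim /etc/yum.repos.d/kubernetes.repo |

Write:

1 | [kubernetes] |

Refresh

1 | # Because the upstream site does not provide a sync mechanism, the GPG check of the index may fail |

Huawei Cloud mirror

1 | [kubernetes] |

kubectl config delete-cluster removes the local reference to the cluster.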

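Using appropriate credentials to talk to the control-plane node, run:

1 | kubectl drain <node name> --delete-emptydir-data --force --ignore-daemonsets |

Before removing the node, reset the state installed by kubeadm:

1 | kubeadm reset |

The reset process does not reset or clean up iptables rules or IPVS tables. If you wish to reset iptables, you must do so manually:

1 | iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X |

If you want to reset the IPVS tables, you must run the following command:

1 | ipvsadm -C |

Now remove the node:

1 | kubectl delete node <node name> |

If you wish to start over, run kubeadm init or kubeadm join with the appropriate arguments.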

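CentOS 7 mirror

1 | # Back up |

Docker

1 | # The ISO image must be mounted |

1 | # step 1: install the required system tools |

Docker registry mirror (acceleration)

1 | mkdir /etc/docker |

Write:

1 | { |

Script to use after the Spray installation; any one of the addresses can be used.

1 | # Docker China mirror |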

1 | docker info |

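A StorageClass provides a way for administrators to describe the classes of storage they offer. Different classes might map to quality-of-service levels, to backup policies, or to arbitrary policies determined by the cluster administrators. Kubernetes itself has no opinion about what classes represent. This concept is sometimes called "profiles" in other storage systems.

Each StorageClass contains the fields provisioner, parameters, and reclaimPolicy, which are used when a PersistentVolume belonging to the class needs to be dynamically provisioned.

The name of a StorageClass object is significant: it is how users request a particular class. Administrators set the name and other parameters of a class when first creating the StorageClass object, and the object cannot be updated once it is created.

Administrators can specify a default StorageClass only for PVCs that do not request any particular class to bind to; see the PersistentVolumeClaim section for details.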

1 | apiVersion: storage.k8s.io/v1 |

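Provisioner

Each StorageClass has a provisioner that determines which volume plugin is used for provisioning PVs. This field must be specified.

Provisioners are either internal or external.

Reclaim Policy

PersistentVolumes that are dynamically created by a StorageClass have the reclaim policy specified in the reclaimPolicy field of the class, which can be either Delete or Retain. If no reclaimPolicy is specified when the StorageClass object is created, it defaults to Delete.

PersistentVolumes that are created manually and managed through a StorageClass keep whatever reclaim policy they were assigned at creation.

Allow Volume Expansion

PersistentVolumes can be configured to be expandable. When this feature is set to true, users can resize the volume by editing the corresponding PVC object.

Mount Options

PersistentVolumes that are dynamically created by a StorageClass have the mount options specified in the mountOptions field of the class.

If the volume plugin does not support mount options but mount options are specified, provisioning fails. Mount options are not validated on either the class or the PV. If a mount option is invalid, the PV mount fails.

Volume Binding Mode

The volumeBindingMode field controls when volume binding and dynamic provisioning should occur. When unset, Immediate mode is used by default.

Immediate mode means that volume binding and dynamic provisioning occur as soon as the PersistentVolumeClaim is created. For storage backends that are topology-constrained and not globally accessible from all nodes in the cluster, PersistentVolumes are bound or provisioned without knowledge of the Pod's scheduling requirements. This may result in unschedulable Pods.

When a cluster operator specifies the WaitForFirstConsumer volume binding mode, it is no longer necessary in most situations to restrict provisioning to specific topologies. If it is still required, allowedTopologies can be specified.

This example demonstrates how to restrict the topology of provisioned volumes to specific zones; it should be used as a replacement for the zone and zones parameters of the supported plugins.

Parameters

Storage classes have parameters that describe the volumes belonging to the class. Different parameters may be accepted depending on the provisioner. For example, the value io1 for the parameter type and the parameter iopsPerGB are specific to EBS. When a parameter is omitted, a default is used.

At most 512 parameters can be defined for a StorageClass. The total length of the parameters object, including its keys and values, cannot exceed 256 KiB.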

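NFS

example-nfs-storage-class.yaml

1 | apiVersion: storage.k8s.io/v1 |

- server: the hostname or IP address of the NFS server.
- path: the path exported by the NFS server.
- readOnly: a flag indicating whether the storage is mounted read-only (defaults to false).

Kubernetes does not include an internal NFS provisioner. You need to use an external provisioner to create a StorageClass for NFS.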
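A manifest with these three parameters could be generated as in the sketch below. The provisioner name `example.com/external-nfs`, the class name `example-nfs`, and the export path `/share` are placeholders, not values from this setup; the server address is the nfs host listed earlier in this post. `render_nfs_storage_class` is a hypothetical helper that only prints YAML.

```shell
#!/bin/sh
# Print a StorageClass manifest for an external NFS provisioner.
# Usage: render_nfs_storage_class NAME SERVER PATH
render_nfs_storage_class() {
  cat <<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: $1
provisioner: example.com/external-nfs   # placeholder: use your provisioner's name
parameters:
  server: $2
  path: $3
  readOnly: "false"
EOF
}

render_nfs_storage_class example-nfs 192.168.2.240 /share
```

The output can be saved to a file or piped to `kubectl apply -f -` once the provisioner name matches the external provisioner deployed in the cluster.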

PVC

nfs-pvc.yaml

1 | apiVersion: v1 |

nfs-pvc

test-deployment.yaml

1 | apiVersion: apps/v1 |

1 | kubectl apply -f test-deployment.yaml |

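nginx usually proxies layer-7 HTTP traffic, but it can also proxy layer-4 protocols.

Modify the configuration

1 | http {} |

Connection command

1 | ssh root@192.168.7.173 -p 50022 |

Forward request headers

1 | location /prod-api/ { |

Install the tool

1 | sudo yum install -y ntpdate |

Synchronize the time

1 | sudo ntpdate -u ntp.api.bz |

Common services

Common NTP servers:

- 210.72.145.44
- ntp.api.bz
- time.nist.gov
- ntp.fudan.edu.cn
- time.windows.com
- s1a.time.edu.cn
- s1b.time.edu.cn
- s1c.time.edu.cn
- asia.pool.ntp.org

Check the time zone

1 | date -R |

Change the time zone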

1 | tzselect |