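Preface

Docker must already be installed.

These are only my notes; the official documentation covers everything in detail.

Official documentation

My environment has the following hosts:

192.168.222.180 master
192.168.222.181 master
192.168.222.182 master
192.168.222.185 node
192.168.222.186 node
192.168.222.240 nfs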

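Single-node Docker install

```sh
# Run Rancher in a single container; its data persists in /var/lib/rancher on the host
docker run -d --privileged --restart=unless-stopped \
  -p 80:80 -p 443:443 \
  -v /var/lib/rancher:/var/lib/rancher \
  rancher/rancher:latest
# Confirm the container is running
docker ps
```

Note: if the host is a virtual machine, it must have a static IP.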
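Rancher usually needs a minute or two after `docker run` before the UI answers. A minimal sketch of a retry helper for waiting on it follows. The `retry` function is a hypothetical helper of my own, not part of Docker or Rancher, and the `/ping` health URL in the usage comment is an assumption that should be checked against your Rancher version.

```sh
# retry ATTEMPTS DELAY_SECONDS COMMAND...
# Runs COMMAND until it succeeds or ATTEMPTS runs have failed.
retry() {
  attempts=$1
  delay=$2
  shift 2
  n=1
  while [ "$n" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    n=$((n + 1))
    # Do not sleep after the final failed attempt.
    [ "$n" -le "$attempts" ] && sleep "$delay"
  done
  return 1
}

# Usage (assumed endpoint; -k accepts the self-signed certificate):
#   retry 30 10 curl -kfsS https://192.168.222.180/ping
```

The helper returns 0 on the first success and 1 once every attempt has failed, so it can be chained with `&&` in a provisioning script.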

Setting the password: the login page shows the command for retrieving the bootstrap password.

```sh
docker logs container-id 2>&1 | grep "Bootstrap Password:"
```
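The grep above prints the whole log line. If only the password itself is wanted (for example to paste it into a script), a small filter can strip the prefix. This is a sketch: `extract_bootstrap_password` is a hypothetical helper name, and it assumes the log line ends with the password right after the text "Bootstrap Password: ".

```sh
# Print only the password from Rancher log lines read on stdin.
extract_bootstrap_password() {
  # Drop everything up to and including the marker, keep the first match.
  sed -n 's/^.*Bootstrap Password: //p' | head -n 1
}

# Usage:
#   docker logs container-id 2>&1 | extract_bootstrap_password
```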

High-availability Rancher

1. Install RKE

```sh
cd ~/
# -L follows the GitHub release redirect; -o needs an output file name
curl -L https://github.com/rancher/rke/releases/download/v1.3.11/rke_linux-amd64 -o rke_linux-amd64
mv rke_linux-amd64 rke
chmod +x rke
mv rke /usr/local/bin/
rke --version
# Copy the binary to the other master nodes
scp /usr/local/bin/rke root@192.168.222.181:/usr/local/bin/
scp /usr/local/bin/rke root@192.168.222.182:/usr/local/bin/
# Set up key-based SSH to every master node
ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.222.180
ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.222.181
ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.222.182
```
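The repeated scp and ssh-copy-id lines can be folded into a loop. The sketch below is one way to do it, not the author's method: `push_rke` is a hypothetical helper, and the `SSH_COPY_ID` and `SCP` variables are my own addition so the commands can be previewed with `echo` before touching any host.

```sh
# push_rke HOST...: install the SSH key on each host, then copy the rke binary.
push_rke() {
  for host in "$@"; do
    ${SSH_COPY_ID:-ssh-copy-id} -i "$HOME/.ssh/id_rsa.pub" "root@$host" || return 1
    ${SCP:-scp} /usr/local/bin/rke "root@$host:/usr/local/bin/" || return 1
  done
}

# Preview only:
#   SSH_COPY_ID="echo ssh-copy-id" SCP="echo scp" push_rke 192.168.222.181 192.168.222.182
# Real run:
#   push_rke 192.168.222.181 192.168.222.182
```

Installing the key first means the scp that follows no longer prompts for a password.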

GitHub RKE releases page

Mirror for users in China

Usage

2. Install kubectl

Once the package mirror is configured, install on every node.

```sh
sudo yum install -y kubectl kubelet
# Turn swap off now and keep it off after a reboot
swapoff -a
sed -ri 's/.*swap.*/#&/' /etc/fstab
# Load the kernel modules Kubernetes networking needs
lsmod | grep br_netfilter
sudo modprobe overlay
sudo modprobe br_netfilter
echo "1" > /proc/sys/net/bridge/bridge-nf-call-iptables
# Load br_netfilter at boot
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
br_netfilter
EOF
# Let iptables see bridged traffic and enable IP forwarding
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf
overlay
br_netfilter
EOF
lsmod | grep br_netfilter
cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
EOF
# Apply the sysctl settings without rebooting
sudo sysctl --system
```
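Since these settings have to be repeated on every node, a quick check that a sysctl file really contains all three keys can catch a missed node. A minimal sketch, where `check_k8s_sysctl` is a hypothetical helper that only inspects the file and does not query the running kernel:

```sh
# check_k8s_sysctl FILE: succeed only if FILE sets all three keys to 1.
check_k8s_sysctl() {
  for key in net.bridge.bridge-nf-call-ip6tables \
             net.bridge.bridge-nf-call-iptables \
             net.ipv4.ip_forward; do
    # The dots in the key are regex wildcards here, which is loose but harmless.
    if ! grep -Eq "^[[:space:]]*$key[[:space:]]*=[[:space:]]*1[[:space:]]*$" "$1"; then
      echo "missing or not set to 1: $key" >&2
      return 1
    fi
  done
}

# Usage:
#   check_k8s_sysctl /etc/sysctl.d/k8s.conf && echo "sysctl file looks complete"
```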

Example cluster.yml

```yaml
nodes:
  - address: 192.168.222.180
    internal_address: 192.168.222.180
    user: docker
    role: [controlplane, worker, etcd]
    hostname_override: host180
  - address: 192.168.222.181
    internal_address: 192.168.222.181
    user: docker
    role: [controlplane, worker, etcd]
    hostname_override: host181
  - address: 192.168.222.182
    internal_address: 192.168.222.182
    user: docker
    role: [controlplane, worker, etcd]
    hostname_override: host182

services:
  etcd:
    snapshot: true
    creation: 6h
    retention: 24h

ingress:
  provider: nginx
  options:
    use-forwarded-headers: "true"
```
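The three node entries differ only in the IP address and the host suffix, so the `nodes:` section can be generated rather than typed. The following is a sketch under that assumption: `render_nodes` is a hypothetical helper, and it derives `hostname_override` from the last octet of the IP, which matches the naming used in these notes but is not an RKE rule.

```sh
# render_nodes USER IP...: print a cluster.yml "nodes:" section with
# every node carrying all three roles.
render_nodes() {
  user=$1
  shift
  echo "nodes:"
  for ip in "$@"; do
    printf '  - address: %s\n' "$ip"
    printf '    internal_address: %s\n' "$ip"
    printf '    user: %s\n' "$user"
    printf '    role: [controlplane, worker, etcd]\n'
    # ${ip##*.} keeps only the text after the last dot, e.g. 180
    printf '    hostname_override: host%s\n' "${ip##*.}"
  done
}

# Usage:
#   render_nodes docker 192.168.222.180 192.168.222.181 192.168.222.182 > nodes.yml
```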

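```sh
# Create the cluster
rke up --config cluster.yml
# Tear the cluster down
rke remove --config cluster.yml
# Apply node changes without redeploying the control plane and etcd
rke up --update-only --config cluster.yml
```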
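Once `rke up` finishes, kubectl needs a kubeconfig. As far as I know, RKE writes one next to the cluster config and names it `kube_config_` plus the config file name; treat that naming as an assumption and check the directory after the run. A small sketch that derives the path (`kubeconfig_for` is a hypothetical helper):

```sh
# kubeconfig_for CONFIG: print the kubeconfig path RKE is assumed to write
# for the given cluster config file.
kubeconfig_for() {
  printf '%s/kube_config_%s\n' "$(dirname "$1")" "$(basename "$1")"
}

# Usage:
#   export KUBECONFIG=$(kubeconfig_for ./cluster.yml)
#   kubectl get nodes
```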

4. Install Helm

```sh
cd ~/
# Download and unpack the Helm binary
curl https://get.helm.sh/helm-v3.9.0-linux-amd64.tar.gz -o helm-v3.9.0-linux-amd64.tar.gz
tar -zxvf helm-v3.9.0-linux-amd64.tar.gz
mv linux-amd64/helm /usr/local/bin/helm
# Add a general-purpose chart repository and search it
helm repo add bitnami https://charts.bitnami.com/bitnami
helm search repo bitnami
# Add the Rancher chart repositories (Aliyun mirror)
helm repo add rancher-latest http://rancher-mirror.oss-cn-beijing.aliyuncs.com/server-charts/latest
helm repo add rancher-stable http://rancher-mirror.oss-cn-beijing.aliyuncs.com/server-charts/stable
helm repo list
helm repo update
# Smoke test: install a chart with a generated release name
helm install bitnami/mysql --generate-name
```
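The download above is not verified before the binary is moved into `/usr/local/bin`. A minimal sketch of a checksum check follows. `verify_sha256` is a hypothetical helper, and the `.sha256sum` URL in the usage comment is an assumption about how the Helm download site publishes checksums, so confirm it on the Helm releases page.

```sh
# verify_sha256 FILE EXPECTED_HEX: succeed only if FILE has that SHA-256 digest.
verify_sha256() {
  actual=$(sha256sum "$1" | awk '{print $1}')
  [ "$actual" = "$2" ]
}

# Usage (assumed checksum URL):
#   expected=$(curl -fsSL https://get.helm.sh/helm-v3.9.0-linux-amd64.tar.gz.sha256sum | awk '{print $1}')
#   verify_sha256 helm-v3.9.0-linux-amd64.tar.gz "$expected" || echo "checksum mismatch" >&2
```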

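Helm releases

Quickstart guide

5. Install Rancher

This cluster was provisioned from another Rancher instance, so kubeconfig has to be set up first:

In Cluster Management, select the cluster, then More > Download KubeConfig.

Apply the following on every node: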

```sh
mkdir -p ~/.kube
cat > ~/.kube/config << EOF
apiVersion: v1
kind: Config
clusters:
- name: "ctmd"
  cluster:
    server: "https://192.168.222.251/k8s/clusters/c-gwdd5"
    certificate-authority-data: "LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUJwekNDQVUyZ0F3SUJBZ0lCQURBS0JnZ3Foa2pPUFFRREFqQTdNUnd3R2dZRFZRUUtFeE5rZVc1aGJXbGoKYkdsemRHVnVaWEl0YjNKbk1Sc3dHUVlEVlFRREV4SmtlVzVoYldsamJHbHpkR1Z1WlhJdFkyRXdIaGNOTWpJdwpOakE0TURZd01qQTRXaGNOTXpJd05qQTFNRFl3TWpBNFdqQTdNUnd3R2dZRFZRUUtFeE5rZVc1aGJXbGpiR2x6CmRHVnVaWEl0YjNKbk1Sc3dHUVlEVlFRREV4SmtlVzVoYldsamJHbHpkR1Z1WlhJdFkyRXdXVEFUQmdjcWhrak8KUFFJQkJnZ3Foa2pPUFFNQkJ3TkNBQVJUNHhxcGNQM0ZKVnhKYW5HZjRIVTJLbUFWRkJuTUc2YUZjbFFVWitDdgo4UEx2R0FMTDFsWTkveGVFeFhQMHNRQjdzcUVINDFSSzhqSHBwcG1laVFRd28wSXdRREFPQmdOVkhROEJBZjhFCkJBTUNBcVF3RHdZRFZSMFRBUUgvQkFVd0F3RUIvekFkQmdOVkhRNEVGZ1FVWGJNQ0VmNHVIRXZFUnNwUTJ3N2QKK3pjVWRYY3dDZ1lJS29aSXpqMEVBd0lEU0FBd1JRSWhBTFhuZDhFNzNWU080UnFoeElpZHJ3YWxjMk5iYW03bQpwR1hjdUl6MW9YNVRBaUEvRVZZMWwrT1JSRzRCK0JCUEc4UTlzd1dsVzljdndDVk1ydENlb2p1VTBRPT0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQ=="

users:
- name: "ctmd"
  user:
    token: "kubeconfig-user-6x5rds8w65:tkpcjwlpvhv9qrsvp8djbtb9qwwfqfh9hdtpb24hzkf9bjb6m2mwqw"

contexts:
- name: "ctmd"
  context:
    user: "ctmd"
    cluster: "ctmd"

current-context: "ctmd"
EOF
```

```sh
kubectl create namespace cattle-system
```
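Only the namespace command survives in these notes, so the chart install itself is not shown. The sketch below is based on the upstream Rancher Helm chart rather than on the author's own commands: it uses the `rancher-stable` repository added in the Helm step, `rancher.example.com` is a hypothetical hostname, and `install_rancher` with its `HELM` override is my own wrapper for previewing the command. The chart's default TLS setup expects cert-manager to be installed first, which is not covered here.

```sh
# install_rancher HOSTNAME: install the Rancher chart into cattle-system.
install_rancher() {
  ${HELM:-helm} install rancher rancher-stable/rancher \
    --namespace cattle-system \
    --set hostname="$1"
}

# Preview without touching the cluster:
#   HELM="echo helm" install_rancher rancher.example.com
# Real run:
#   install_rancher rancher.example.com
```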

System configuration

Disable the firewall

```sh
sudo systemctl stop firewalld
sudo systemctl disable firewalld
# Put SELinux into permissive mode until the next reboot
setenforce 0
```
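`setenforce 0` only lasts until the next reboot. To keep SELinux permissive across reboots, the `SELINUX=` line in `/etc/selinux/config` has to change as well. A minimal sketch, assuming GNU sed as shipped with CentOS; `set_selinux_permissive` is a hypothetical helper and takes an optional file argument so it can be tried on a copy first:

```sh
# set_selinux_permissive [FILE]: rewrite the SELINUX= line to permissive.
# FILE defaults to /etc/selinux/config.
set_selinux_permissive() {
  # The pattern requires "=" right after SELINUX, so SELINUXTYPE= is untouched.
  sed -i 's/^SELINUX=.*/SELINUX=permissive/' "${1:-/etc/selinux/config}"
}

# Usage:
#   sudo cp /etc/selinux/config /etc/selinux/config.backup
#   set_selinux_permissive        # run as root
```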

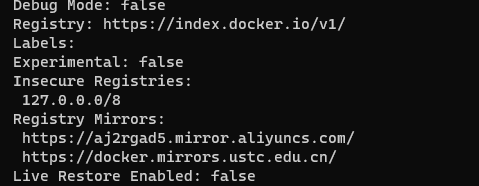

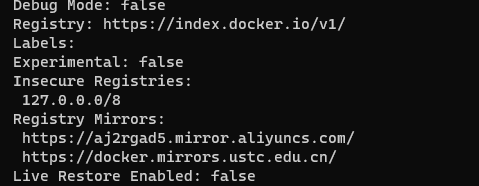

Docker registry mirrors

```sh
sudo vim /etc/docker/daemon.json
```

Change it to:

```json
{
  "registry-mirrors": ["https://aj2rgad5.mirror.aliyuncs.com", "https://docker.mirrors.ustc.edu.cn"],
  "dns": ["8.8.8.8", "8.8.4.4"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
```

For remote access, add: "hosts": ["unix:///var/run/docker.sock", "tcp://127.0.0.1:2375"]
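Editing daemon.json by hand on every node is error-prone, since a stray comma stops Docker from starting. The file can instead be rendered from a list of mirrors. This is a sketch, not a full generator: `render_daemon_json` is a hypothetical helper that emits only the `registry-mirrors` and `exec-opts` keys, and it assumes the mirror URLs contain no characters that need JSON escaping.

```sh
# render_daemon_json MIRROR...: print a daemon.json with the given registry
# mirrors and the systemd cgroup driver.
render_daemon_json() {
  printf '{\n  "registry-mirrors": ['
  sep=""
  for m in "$@"; do
    printf '%s"%s"' "$sep" "$m"
    sep=", "
  done
  printf '],\n  "exec-opts": ["native.cgroupdriver=systemd"]\n}\n'
}

# Usage:
#   render_daemon_json https://docker.mirrors.ustc.edu.cn | sudo tee /etc/docker/daemon.json
#   sudo systemctl daemon-reload && sudo systemctl restart docker
```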

Configure the Kubernetes package mirror

```sh
sudo vim /etc/yum.repos.d/kubernetes.repo
```

Write the following:

```ini
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
```

Then refresh the yum cache.

Huawei Cloud mirror

```ini
[kubernetes]
name=Kubernetes
baseurl=https://repo.huaweicloud.com/kubernetes/yum/repos/kubernetes-el7-$basearch
enabled=1
gpgcheck=1
repo_gpgcheck=0
gpgkey=https://repo.huaweicloud.com/kubernetes/yum/doc/yum-key.gpg
       https://repo.huaweicloud.com/kubernetes/yum/doc/rpm-package-key.gpg
```
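The two repo files differ only in the mirror's base URL, so one template covers both. A sketch under that assumption: `render_k8s_repo` is a hypothetical helper, and it hardcodes `x86_64` rather than `$basearch` to keep the heredoc free of escaping.

```sh
# render_k8s_repo BASE_URL: print a kubernetes.repo for the given mirror,
# e.g. https://mirrors.aliyun.com/kubernetes
render_k8s_repo() {
  base=${1%/}   # drop one trailing slash, if present
  cat <<EOF
[kubernetes]
name=Kubernetes
baseurl=$base/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=0
gpgkey=$base/yum/doc/yum-key.gpg $base/yum/doc/rpm-package-key.gpg
EOF
}

# Usage:
#   render_k8s_repo https://mirrors.aliyun.com/kubernetes | sudo tee /etc/yum.repos.d/kubernetes.repo
#   render_k8s_repo https://repo.huaweicloud.com/kubernetes | sudo tee /etc/yum.repos.d/kubernetes.repo
```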

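Tear down the cluster

kubectl config delete-cluster removes the local reference to the cluster.

Remove a node

Using credentials that can reach the control-plane node, run:

```sh
kubectl drain <node name> --delete-emptydir-data --force --ignore-daemonsets
```

Before removing the node, reset the state installed by kubeadm (kubeadm reset).

The reset process does not reset or clean up iptables rules or IPVS tables. To reset iptables, do it manually:

```sh
iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X
```

To reset the IPVS tables, run ipvsadm -C.

Now remove the node:

```sh
kubectl delete node <node name>
```

To start over, run kubeadm init or kubeadm join with the appropriate arguments.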
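The drain and delete steps always go together, so they can be wrapped in one function that stops if the drain fails. A minimal sketch: `remove_node` is a hypothetical helper, and the `KUBECTL` override is my own addition for previewing the commands. The kubeadm reset on the node itself is left out because it has to run on that node, not through kubectl.

```sh
# remove_node NODE: drain the node, then delete it from the cluster.
remove_node() {
  ${KUBECTL:-kubectl} drain "$1" --delete-emptydir-data --force --ignore-daemonsets || return 1
  ${KUBECTL:-kubectl} delete node "$1"
}

# Preview only:
#   KUBECTL="echo kubectl" remove_node host185
```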

Appendix

Rancher cluster configuration file

cluster.yml


```yaml
nodes:
- address: 192.168.222.180
  port: "22"
  internal_address: 192.168.222.180
  role:
  - controlplane
  - worker
  - etcd
  hostname_override: host180
  user: root
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: ""
  port: "22"
  internal_address: ""
  role:
  - controlplane
  hostname_override: ""
  user: ubuntu
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: ""
  port: "22"
  internal_address: ""
  role:
  - controlplane
  hostname_override: ""
  user: ubuntu
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
services:
  etcd:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
    external_urls: []
    ca_cert: ""
    cert: ""
    key: ""
    path: ""
    uid: 0
    gid: 0
    snapshot: null
    retention: ""
    creation: ""
    backup_config: null
  kube-api:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
    service_cluster_ip_range: 10.43.0.0/16
    service_node_port_range: ""
    pod_security_policy: true
    always_pull_images: false
    secrets_encryption_config: null
    audit_log: null
    admission_configuration: null
    event_rate_limit: null
  kube-controller:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
    cluster_cidr: 10.42.0.0/16
    service_cluster_ip_range: 10.43.0.0/16
  scheduler:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
  kubelet:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
    cluster_domain: cluster.local
    infra_container_image: ""
    cluster_dns_server: 10.43.0.10
    fail_swap_on: false
    generate_serving_certificate: false
  kubeproxy:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
network:
  plugin: canal
  options: {}
  mtu: 0
  node_selector: {}
  update_strategy: null
  tolerations: []
authentication:
  strategy: x509
  sans: []
  webhook: null
addons: ""
addons_include: []
system_images:
  etcd: rancher/mirrored-coreos-etcd:v3.5.3
  alpine: rancher/rke-tools:v0.1.80
  nginx_proxy: rancher/rke-tools:v0.1.80
  cert_downloader: rancher/rke-tools:v0.1.80
  kubernetes_services_sidecar: rancher/rke-tools:v0.1.80
  kubedns: rancher/mirrored-k8s-dns-node-cache:1.21.1
  dnsmasq: rancher/mirrored-k8s-dns-dnsmasq-nanny:1.21.1
  kubedns_sidecar: rancher/mirrored-k8s-dns-sidecar:1.21.1
  kubedns_autoscaler: rancher/mirrored-cluster-proportional-autoscaler:1.8.5
  coredns: rancher/mirrored-coredns-coredns:1.9.0
  coredns_autoscaler: rancher/mirrored-cluster-proportional-autoscaler:1.8.5
  nodelocal: rancher/mirrored-k8s-dns-node-cache:1.21.1
  kubernetes: rancher/hyperkube:v1.23.6-rancher1
  flannel: rancher/mirrored-coreos-flannel:v0.15.1
  flannel_cni: rancher/flannel-cni:v0.3.0-rancher6
  calico_node: rancher/mirrored-calico-node:v3.22.0
  calico_cni: rancher/mirrored-calico-cni:v3.22.0
  calico_controllers: rancher/mirrored-calico-kube-controllers:v3.22.0
  calico_ctl: rancher/mirrored-calico-ctl:v3.22.0
  calico_flexvol: rancher/mirrored-calico-pod2daemon-flexvol:v3.22.0
  canal_node: rancher/mirrored-calico-node:v3.22.0
  canal_cni: rancher/mirrored-calico-cni:v3.22.0
  canal_controllers: rancher/mirrored-calico-kube-controllers:v3.22.0
  canal_flannel: rancher/mirrored-flannelcni-flannel:v0.17.0
  canal_flexvol: rancher/mirrored-calico-pod2daemon-flexvol:v3.22.0
  weave_node: weaveworks/weave-kube:2.8.1
  weave_cni: weaveworks/weave-npc:2.8.1
  pod_infra_container: rancher/mirrored-pause:3.6
  ingress: rancher/nginx-ingress-controller:nginx-1.2.0-rancher1
  ingress_backend: rancher/mirrored-nginx-ingress-controller-defaultbackend:1.5-rancher1
  ingress_webhook: rancher/mirrored-ingress-nginx-kube-webhook-certgen:v1.1.1
  metrics_server: rancher/mirrored-metrics-server:v0.6.1
  windows_pod_infra_container: rancher/mirrored-pause:3.6
  aci_cni_deploy_container: noiro/cnideploy:5.1.1.0.1ae238a
  aci_host_container: noiro/aci-containers-host:5.1.1.0.1ae238a
  aci_opflex_container: noiro/opflex:5.1.1.0.1ae238a
  aci_mcast_container: noiro/opflex:5.1.1.0.1ae238a
  aci_ovs_container: noiro/openvswitch:5.1.1.0.1ae238a
  aci_controller_container: noiro/aci-containers-controller:5.1.1.0.1ae238a
  aci_gbp_server_container: noiro/gbp-server:5.1.1.0.1ae238a
  aci_opflex_server_container: noiro/opflex-server:5.1.1.0.1ae238a
ssh_key_path: ~/.ssh/id_rsa
ssh_cert_path: ""
ssh_agent_auth: false
authorization:
  mode: rbac
  options: {}
ignore_docker_version: null
enable_cri_dockerd: null
kubernetes_version: ""
private_registries: []
ingress:
  provider: ""
  options: {}
  node_selector: {}
  extra_args: {}
  dns_policy: ""
  extra_envs: []
  extra_volumes: []
  extra_volume_mounts: []
  update_strategy: null
  http_port: 0
  https_port: 0
  network_mode: ""
  tolerations: []
  default_backend: null
  default_http_backend_priority_class_name: ""
  nginx_ingress_controller_priority_class_name: ""
  default_ingress_class: null
cluster_name: ""
cloud_provider:
  name: ""
prefix_path: ""
win_prefix_path: ""
addon_job_timeout: 0
bastion_host:
  address: ""
  port: ""
  user: ""
  ssh_key: ""
  ssh_key_path: ""
  ssh_cert: ""
  ssh_cert_path: ""
  ignore_proxy_env_vars: false
monitoring:
  provider: ""
  options: {}
  node_selector: {}
  update_strategy: null
  replicas: null
  tolerations: []
  metrics_server_priority_class_name: ""
restore:
  restore: false
  snapshot_name: ""
rotate_encryption_key: false
dns: null
```

CentOS 7 mirror

```sh
# Back up the stock repo file
mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup
# Fetch the Aliyun repo file with wget ...
wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo
# ... or with curl
curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo
# Rebuild the package cache
yum makecache
```
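Running the `mv` above a second time would overwrite the original backup with the already-modified repo file. A small guard avoids that. This is a sketch: `backup_once` is a hypothetical helper, and it copies rather than moves, so the repo file stays in place until the download replaces it.

```sh
# backup_once FILE: copy FILE to FILE.backup unless a backup already exists.
backup_once() {
  if [ -e "$1.backup" ]; then
    echo "backup already exists: $1.backup" >&2
    return 0
  fi
  cp -p "$1" "$1.backup"
}

# Usage:
#   backup_once /etc/yum.repos.d/CentOS-Base.repo
```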

Docker

```sh
# Mount the installation media
sudo mkdir /mnt/cdrom
sudo mount /dev/cdrom /mnt/cdrom/
# Add the official Docker CE repository
sudo yum install -y yum-utils
sudo yum-config-manager \
  --add-repo \
  https://download.docker.com/linux/centos/docker-ce.repo
```

Using the Aliyun mirror

```sh
# Install prerequisites
sudo yum install -y yum-utils device-mapper-persistent-data lvm2
# Add the Docker CE repository from the Aliyun mirror
sudo yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
# Point any remaining download.docker.com URLs at the mirror
sudo sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo
sudo yum makecache fast
```

Configure the Kubernetes package mirror

File: /etc/yum.repos.d/kubernetes.repo

Using the Aliyun mirror

```sh
cat > /etc/yum.repos.d/kubernetes.repo <<EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
```

Then refresh the yum cache.

Docker registry mirror acceleration

Manual edit

```sh
# -p avoids an error when the directory already exists
mkdir -p /etc/docker
vim /etc/docker/daemon.json
```

Write the following:

```json
{
  "registry-mirrors": [
    "https://aj2rgad5.mirror.aliyuncs.com",
    "https://docker.mirrors.ustc.edu.cn",
    "https://registry.cn-hangzhou.aliyuncs.com",
    "https://registry.docker-cn.com",
    "https://05f073ad3c0010ea0f4bc00b7105ec20.mirror.swr.myhuaweicloud.com"
  ],
  "dns": ["8.8.8.8", "8.8.4.4"]
}
```

Script to use after a Spray install

Any one of the mirror addresses can be used.

```sh
# Pick a registry mirror
export REGISTRY_MIRROR=https://registry.cn-hangzhou.aliyuncs.com
# Apply it with the kuboard.cn helper script
curl -sSL https://kuboard.cn/install-script/set_mirror.sh | sh -s ${REGISTRY_MIRROR}
systemctl restart kubelet
```

Check the result

Article URL: https://github.com/maxzhao-it/blog/post/72c978ad/