Creating a cluster from a configuration file

```shell
kubeadm init --config=kubeadm-config.yaml
```

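Configuration file contents

v1beta3 documentation

Configuration types

A kubeadm configuration file can contain multiple configuration types, separated by a line of three dashes (`---`).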

```yaml
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: JoinConfiguration
```
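A small awk helper can show which configuration types a multi-document file actually contains, for example before passing it to `kubeadm init --config`. This is a hypothetical sketch: the name `list_config_kinds` is made up here. It assumes `apiVersion:` and `kind:` start at column 0 and that documents are separated by bare `---` lines.

```shell
#!/usr/bin/env bash
# list_config_kinds FILE
# Hypothetical helper: print "<apiVersion> <kind>" for every YAML document in FILE.
# Assumes top-level keys start at column 0 and documents are separated by "---" lines.
list_config_kinds() {
  awk '
    /^---[[:space:]]*$/ { if (kind != "") print api, kind; api = ""; kind = ""; next }
    /^apiVersion:/      { api = $2 }
    /^kind:/            { kind = $2 }
    END                 { if (kind != "") print api, kind }
  ' "$1"
}

# Usage: list_config_kinds ~/kubeadm-config-init.yaml
```

Nested keys such as the `kind: Config` inside an embedded kubeconfig are indented, so they are ignored.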

Print the default values for init and join

```shell
kubeadm config print init-defaults
kubeadm config print init-defaults --component-configs KubeletConfiguration
kubeadm config print init-defaults --component-configs KubeProxyConfiguration
kubeadm config print join-defaults
```
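The component-config defaults can also be written into a single file together with the init defaults, which gives you a starting point for editing. Below is a sketch using a hypothetical helper, `dump_init_defaults`. It assumes your kubeadm release accepts a comma-separated list for `--component-configs`.

```shell
#!/usr/bin/env bash
# dump_init_defaults [OUT_FILE]
# Hypothetical helper: write the init defaults plus the kubelet and kube-proxy
# component config defaults into one multi-document YAML file.
dump_init_defaults() {
  local out=${1:-init-defaults.yaml}
  kubeadm config print init-defaults \
    --component-configs KubeletConfiguration,KubeProxyConfiguration > "$out"
}

# Usage: dump_init_defaults ~/kubeadm-defaults.yaml
```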

Kubeadm init configuration types

When running kubeadm init with the --config option, the following configuration types can be used: InitConfiguration, ClusterConfiguration, KubeProxyConfiguration and KubeletConfiguration. Only one of InitConfiguration and ClusterConfiguration is mandatory.

InitConfiguration

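Used to configure runtime settings. For kubeadm init, these are the bootstrap token configuration plus all settings specific to the node on which kubeadm is executed, including:

- NodeRegistration: fields related to registering the new node with the cluster. Use it to customize the node name, the CRI socket, or any other setting that should apply only to this node (for example the node IP).
- LocalAPIEndpoint: the endpoint of the API server instance to be deployed on this node. Use it, for example, to customize the API server advertise address.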

ClusterConfiguration

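Used to configure cluster-wide settings, including:

- Networking: the configuration of the cluster network topology. Use it, for example, to customize the Pod subnet or the Service subnet.
- Etcd: use it, for example, to customize the local etcd or to configure the API server to use an external etcd cluster.
- kube-apiserver, kube-scheduler and kube-controller-manager configuration: use it to customize the control plane components, either by adding custom settings or by overriding kubeadm defaults.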

KubeProxyConfiguration

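Used to change the configuration passed to the kube-proxy instances deployed in the cluster. If this object is not provided, or is only partially provided, kubeadm applies default values.

kube-proxy official documentation (kubernetes.io)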

KubeletConfiguration

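Used to change the configuration passed to all kubelet instances deployed in the cluster. If this object is not provided, or is only partially provided, kubeadm applies default values.

kubelet official documentation (kubernetes.io)

kubelet official documentation (godoc.org/k8s.io)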

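Kubeadm join configuration types

When running kubeadm join with the --config option, provide the JoinConfiguration type.

JoinConfiguration configures runtime settings. For kubeadm join, these are the discovery method used to access the cluster info plus all settings specific to the node on which kubeadm is executed, including:

- NodeRegistration: fields related to registering the new node with the cluster. Use it to customize the node name, the CRI socket, or any other setting that should apply only to this node (for example the node IP).
- APIEndpoint: the endpoint of the API server instance that will eventually be deployed on this node.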

This deployment

Full example with field notes

host158 master

```shell
mkdir -p /etc/kubernetes/pki/etcd/
\cp /etc/certs/etcd/ca.pem /etc/kubernetes/pki/etcd/etcd-ca.crt
\cp /etc/certs/etcd/etcd-158.pem /etc/kubernetes/pki/etcd/etcd.crt
\cp /etc/certs/etcd/etcd-158-key.pem /etc/kubernetes/pki/etcd/etcd.key

# Compute the SHA-256 hash of the CA public key (the caCertHashes / --discovery-token-ca-cert-hash value);
# this post also reuses it as certificateKey
# Default CA certificate: /etc/kubernetes/pki/ca.crt
openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | \
  openssl dgst -sha256 -hex | sed 's/^.* //'

# vim ~/kubeadm-config-init.yaml

# Check whether /etc/hosts already contains the mappings
cat /etc/hosts
# Append the mappings on every node
cat >> /etc/hosts << EOF
192.168.2.158 host158
192.168.2.159 host159
192.168.2.160 host160
192.168.2.161 host161
192.168.2.240 host240
192.168.2.240 host241
192.168.2.158 master-158
192.168.2.159 master-159
192.168.2.160 master-160
192.168.2.161 node-161
EOF
echo "192.168.2.158 cluster.158" >> /etc/hosts

# Use your own CA certificate (InitConfiguration.skipPhases can skip certs/ca)
mkdir -p /etc/kubernetes/pki/
\cp /etc/certs/etcd/ca.pem /etc/kubernetes/pki/ca.crt
\cp /etc/certs/etcd/ca-key.pem /etc/kubernetes/pki/ca.key
```
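The openssl pipeline above hashes the DER-encoded public key of the CA certificate. That hash is the value kubeadm expects after `sha256:` in `caCertHashes` or `--discovery-token-ca-cert-hash`. Wrapped as a function it could look like the sketch below (`ca_cert_hash` is a hypothetical name). As an assumption, it swaps in `openssl pkey` for `openssl rsa` so that non-RSA CA keys also work; for RSA keys both produce the same hash.

```shell
#!/usr/bin/env bash
# ca_cert_hash CA_CERT
# Hypothetical helper: SHA-256 over the DER-encoded SubjectPublicKeyInfo of CA_CERT,
# printed as 64 lowercase hex characters (prefix it with "sha256:" for kubeadm).
ca_cert_hash() {
  openssl x509 -pubkey -noout -in "$1" \
    | openssl pkey -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Usage: echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
```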

References

pkg.go.dev/k8s.io v1beta3/types.go

github.com/kubernetes v1beta3/types.go

kubelet-config:KubeletConfiguration

Write the configuration

```shell
cat > ~/kubeadm-config-init.yaml <<EOF
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: fk3wpg.gs0mcv4twx3tz2mc
  ttl: 240h0m0s
  usages:
  - signing
  - authentication
  description: "A human-friendly message explaining why this token exists and what it is used for"
nodeRegistration:
  name: master-158
  criSocket: unix:///var/run/containerd/containerd.sock
  ignorePreflightErrors:
  - IsPrivilegedUser
localAPIEndpoint:
  advertiseAddress: 192.168.2.158
  bindPort: 6443
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
clusterName: k8s-cluster
etcd:
  external:
    endpoints:
    - "https://192.168.2.158:2379"
    - "https://192.168.2.159:2379"
    - "https://192.168.2.160:2379"
    caFile: "/etc/certs/etcd/ca.pem"
    certFile: "/etc/certs/etcd/etcd-158.pem"
    keyFile: "/etc/certs/etcd/etcd-158-key.pem"
networking:
  dnsDomain: cluster.158
  serviceSubnet: 10.96.0.0/16
  podSubnet: "10.244.0.0/16"
kubernetesVersion: 1.24.1
controlPlaneEndpoint: "192.168.2.158:6443"
apiServer:
  extraArgs:
    bind-address: 0.0.0.0
    authorization-mode: "Node,RBAC"
  timeoutForControlPlane: 4m0s
  certSANs:
  - "localhost"
  - "cluster.158"
  - "127.0.0.1"
  - "master-158"
  - "master-159"
  - "master-160"
  - "node-161"
  - "10.96.0.1"
  - "10.244.0.1"
  - "192.168.2.158"
  - "192.168.2.159"
  - "192.168.2.160"
  - "192.168.2.161"
  - "host158"
  - "host159"
  - "host160"
  - "host161"
controllerManager:
  extraArgs:
    bind-address: 0.0.0.0
scheduler:
  extraArgs:
    bind-address: 0.0.0.0
dns: {}
certificatesDir: /etc/kubernetes/pki
imageRepository: registry.aliyuncs.com/google_containers
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
bindAddress: 0.0.0.0
bindAddressHardFail: false
clientConnection:
  acceptContentTypes: ""
  burst: 0
  contentType: ""
  kubeconfig: /var/lib/kube-proxy/kubeconfig.conf
  qps: 0
clusterCIDR: 10.244.0.0/16
configSyncPeriod: 2s
conntrack:
  maxPerCore: null
  min: null
  tcpCloseWaitTimeout: 60s
  tcpEstablishedTimeout: 2s
detectLocalMode: ""
detectLocal:
  bridgeInterface: ""
  interfaceNamePrefix: ""
enableProfiling: false
healthzBindAddress: "0.0.0.0:10256"
hostnameOverride: "kube-proxy-158"
ipvs:
  excludeCIDRs: null
  minSyncPeriod: 1m
  scheduler: ""
  strictARP: true
  syncPeriod: 1m
  tcpFinTimeout: 0s
  tcpTimeout: 0s
  udpTimeout: 0s
metricsBindAddress: "127.0.0.1:10249"
mode: "ipvs"
nodePortAddresses: null
oomScoreAdj: null
portRange: ""
showHiddenMetricsForVersion: ""
udpIdleTimeout: 0s
winkernel:
  enableDSR: false
  forwardHealthCheckVip: false
  networkName: ""
  rootHnsEndpointName: ""
  sourceVip: ""
EOF
```

Create the cluster

```shell
kubeadm init --config=/root/kubeadm-config-init.yaml --v=5
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

Other operations

```shell
# After kubeadm init has finished:
# print the join command for the other nodes (run on host158)
kubeadm token create --print-join-command
# List the phases of kubeadm init
kubeadm init --help
# Upload the configuration to the cluster again
kubeadm init phase upload-config all --config=/root/kubeadm-config-init.yaml --v=5
# Update the kubeconfig files
kubeadm init phase kubeconfig all --config=/root/kubeadm-config-init.yaml --v=5
# Update kube-proxy
kubeadm init phase addon kube-proxy --config=/root/kubeadm-config-init.yaml --v=5
# Inspect the kubeadm-related ConfigMaps
kubectl describe cm -n kube-system kubeadm-config
kubectl get cm -n kube-system kubeadm-config -o yaml
kubectl describe cm -n kube-system kubelet-config
kubectl describe cm -n kube-system kube-proxy
kubectl get cm -n kube-system kube-proxy -o yaml
kubectl describe cm -n kube-system
# Edit them
kubectl edit cm -n kube-system kubeadm-config
kubectl edit cm -n kube-system kubelet-config
kubectl edit cm -n kube-system kube-proxy
# Write the kubelet-config ConfigMap contents to the local file /var/lib/kubelet/config.yaml
kubeadm upgrade node phase kubelet-config --v=10
systemctl restart kubelet
kubeadm upgrade node phase control-plane --v=5
kubeadm upgrade node phase preflight --v=5
```

Using bootstrap tokens

Secret format of a bootstrap token

```shell
cat > bootstrap-token-fk3wpg.yaml << EOF
apiVersion: v1
kind: Secret
metadata:
  name: bootstrap-token-fk3wpg
  namespace: kube-system
type: bootstrap.kubernetes.io/token
stringData:
  description: "Token enabled by kubeadm init."
  token-id: fk3wpg
  # stringData takes plain text; the API server stores it base64-encoded in data
  token-secret: gs0mcv4twx3tz2mc
  expiration: "2022-06-10T03:22:11Z"
  usage-bootstrap-authentication: "true"
  usage-bootstrap-signing: "true"
  auth-extra-groups: system:bootstrappers:worker,system:bootstrappers:ingress,system:bootstrappers:maxzhao-ca
EOF
kubectl apply -f bootstrap-token-fk3wpg.yaml
```
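Bootstrap token IDs must match `[a-z0-9]{6}` and secrets must match `[a-z0-9]{16}`, and the Secret must be named `bootstrap-token-<token-id>` in kube-system. The hypothetical helper `bootstrap_token_secret` below validates a token and prints a minimal Secret in the same shape as above: stringData with the plain secret and both usages enabled. The expiration is passed in as an RFC 3339 UTC timestamp.

```shell
#!/usr/bin/env bash
# bootstrap_token_secret TOKEN EXPIRATION
# Hypothetical helper: validate "<id>.<secret>" and print a bootstrap token Secret.
bootstrap_token_secret() {
  local token=$1 expiration=$2
  if [[ ! "$token" =~ ^([a-z0-9]{6})\.([a-z0-9]{16})$ ]]; then
    echo "invalid bootstrap token: $token" >&2
    return 1
  fi
  local id=${BASH_REMATCH[1]} secret=${BASH_REMATCH[2]}
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: bootstrap-token-${id}
  namespace: kube-system
type: bootstrap.kubernetes.io/token
stringData:
  token-id: ${id}
  token-secret: ${secret}
  expiration: "${expiration}"
  usage-bootstrap-authentication: "true"
  usage-bootstrap-signing: "true"
EOF
}

# Usage: bootstrap_token_secret fk3wpg.gs0mcv4twx3tz2mc 2022-06-10T03:22:11Z | kubectl apply -f -
```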

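Managing tokens with kubeadm

The signed ConfigMap is cluster-info in the kube-public namespace. In a typical workflow, a client reads this ConfigMap without authenticating and while ignoring TLS errors. It then validates the ConfigMap's payload by checking the signature embedded in the ConfigMap.

View cluster-info in kube-public

```shell
kubectl get configmap -n kube-public cluster-info -o yaml
```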
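The value stored under `jws-kubeconfig-<token-id>` is a JWS with a detached payload. It is an HS256 HMAC keyed with the full token `<id>.<secret>` and computed over the `kubeconfig` value. The shell sketch below (hypothetical function `jws_detached`) reproduces that format with openssl, to help understand it; it is not a replacement for the bootstrap signer. It assumes the protected header is serialized exactly as `{"alg":"HS256","kid":"<token-id>"}`, which is what the `eyJhbGciOiJIUzI1NiIsImtpZCI6...` prefix of such values decodes to.

```shell
#!/usr/bin/env bash
# b64url: base64url-encode stdin without padding or line breaks.
b64url() { openssl base64 -A | tr '+/' '-_' | tr -d '='; }

# jws_detached TOKEN_ID TOKEN_SECRET PAYLOAD_FILE
# Hypothetical helper: print "<header>..<signature>" (the payload part is left empty).
jws_detached() {
  local id=$1 secret=$2 payload=$3 header body sig
  header=$(printf '{"alg":"HS256","kid":"%s"}' "$id" | b64url)
  body=$(b64url < "$payload")
  # HS256 over "<header>.<payload>", keyed with the full token "<id>.<secret>"
  sig=$(printf '%s.%s' "$header" "$body" \
    | openssl dgst -sha256 -hmac "$id.$secret" -binary | b64url)
  printf '%s..%s\n' "$header" "$sig"
}

# Usage (the extracted payload must be byte-for-byte identical to the stored value):
# kubectl get cm -n kube-public cluster-info -o jsonpath='{.data.kubeconfig}' > /tmp/kubeconfig
# jws_detached fk3wpg gs0mcv4twx3tz2mc /tmp/kubeconfig
```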

Customizing

```shell
cat > kube-public-cluster-info.yaml << EOF
apiVersion: v1
kind: ConfigMap
metadata:
  creationTimestamp: "2022-06-01T08:02:52Z"
  name: cluster-info
  namespace: kube-public
data:
  jws-kubeconfig-fk3wpg: eyJhbGciOiJIUzI1NiIsImtpZCI6IjA3NDAxYiJ9..tYEfbo6zDNo40MQE07aZcQX2m3EB2rO3NuXtxVMYm9U
  kubeconfig: |
    apiVersion: v1
    clusters:
    - cluster:
        # cat ~/.kube/config
        certificate-authority-data: "LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUR3RENDQXFpZ0F3SUJBZ0lVTUh4Y1p0SFJwbVFpelViUElwLzl3Z2JtdjA4d0RRWUpLb1pJaHZjTkFRRUwKQlFBd2VERUxNQWtHQTFVRUJoTUNRMDR4RVRBUEJnTlZCQWdUQ0VwcFlXNW5JRk4xTVJBd0RnWURWUVFIRXdkTwpZVzVLYVc1bk1SVXdFd1lEVlFRS0V3eGxkR05rTFcxaGVIcG9ZVzh4RmpBVUJnTlZCQXNURFdWMFkyUWdVMlZqCmRYSnBkSGt4RlRBVEJnTlZCQU1UREdWMFkyUXRjbTl2ZEMxallUQWVGdzB5TWpBMU1qa3hOREl6TURCYUZ3MHkKTnpBMU1qZ3hOREl6TURCYU1IZ3hDekFKQmdOVkJBWVRBa05PTVJFd0R3WURWUVFJRXdoS2FXRnVaeUJUZFRFUQpNQTRHQTFVRUJ4TUhUbUZ1U21sdVp6RVZNQk1HQTFVRUNoTU1aWFJqWkMxdFlYaDZhR0Z2TVJZd0ZBWURWUVFMCkV3MWxkR05rSUZObFkzVnlhWFI1TVJVd0V3WURWUVFERXd4bGRHTmtMWEp2YjNRdFkyRXdnZ0VpTUEwR0NTcUcKU0liM0RRRUJBUVVBQTRJQkR3QXdnZ0VLQW9JQkFRQzR2UVcxN0wxNkw3RWtYcFozTXJpSVhKek8ySTRyV2pMSQpKeDU1SFQ2czJFQjRQbE5DS3c4aFJtOWRjQVl2TVB6OEY1WVc4R3AxcWNLNk8vRW94T1grNkVXVzlYazh1RXQxCkxyZUUyUVo2b1JVenZTZFNXdllkRnhPUXM0VFBJcUNOcGFUNHgyZkFEU21sNDdaNWlkYnQrQ3M2ZEo0Z1Ftb2QKSkIxOWMvbDY3VnlwNmxPMWMwVWtNdFNod0xSZ2NFdGpKY1pmd0xPQXFibkRtYzg5Uks2cTh6b1AxMDhuZkwxbwpXZHBoREpsSVRHTmd6UTBGZU10aHZGUHJlU25EOEhWcHlqdjZtZ0pSZWlIWExhSWtEZ0tOQmh4UHRSdzdZb0pQCjNQSStuWkpBTVFOVW1nTWtoeC9mVGVzdW11VUs4RjI3R0N1b0VrNTZYRHJ0R042aEdGenhBZ01CQUFHalFqQkEKTUE0R0ExVWREd0VCL3dRRUF3SUJCakFQQmdOVkhSTUJBZjhFQlRBREFRSC9NQjBHQTFVZERnUVdCQlJISkVWWgp0WEl5RXpJaThndy80SFh0cTg3RllEQU5CZ2txaGtpRzl3MEJBUXNGQUFPQ0FRRUFHYzZoR0Vqd010TDVUeDVVCmxBTUZSTlprRHJlMGRrL2t4Rmlra01EOWIvdUxkYTB5TXRSeEhLUjZYUTNDV3pyNDVINGh1SHRjZXlrL3BzZTIKbzdvSXZySnFzbThJRHBJTVU4Wk9qSGpBdVE0TytRenBtOEpHSXQrNy9JelpkMG1iSW5KdWJZZ2dtZG5GUG5UdQowbFpyTG5JL04vZHA5eVNJUGVnY252QnREaHdUa0tkbkRLZEtLdFdaalRMa1crNmJGUDZSaTZBWmpuczc1cmpvClhEaUtyTGZRS2Y1RlJFbHRHL3N1YnhkNlFDWFNpWDVqUTZlSk9xR1d1UkpRRHovWlZoWGhvdWFWaTVWOGpuRWcKNkVJZWxtR1A5QWRJaE4raFVMYTJZclRLMDJ4OTk3TlBQRnpDMm55NWV3VlFYdzZlNTE1QWU2S3BLUnZBcEE2RQplQWdwTVE9PQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg=="
        server: https://192.168.2.158:6443
      name: ""
    contexts: []
    current-context: ""
    kind: Config
    preferences: {}
    users: []
EOF
kubectl apply -f kube-public-cluster-info.yaml
```

host159 joins the cluster

Certificates

```shell
ssh root@192.168.2.159 "mkdir -p /etc/kubernetes/pki"
scp -r /etc/kubernetes/pki/* root@192.168.2.159:/etc/kubernetes/pki
```

Join

```shell
cat > /root/kubeadm-config-join.yaml <<EOF
apiVersion: kubeadm.k8s.io/v1beta3
kind: JoinConfiguration
nodeRegistration:
  name: master-159
  criSocket: unix:///var/run/containerd/containerd.sock
  ignorePreflightErrors:
  - IsPrivilegedUser
  imagePullPolicy: IfNotPresent
caCertPath: "/etc/kubernetes/pki/ca.crt"
discovery:
  bootstrapToken:
    token: fk3wpg.gs0mcv4twx3tz2mc
    apiServerEndpoint: 192.168.2.158:6443
    caCertHashes:
    - sha256:011acbb00e4983761f3cbe774f45477b75a99a42d14004f72c043ffbb6e5b025
    unsafeSkipCAVerification: false
  tlsBootstrapToken: fk3wpg.gs0mcv4twx3tz2mc
  timeout: 4m0s
controlPlane:
  localAPIEndpoint:
    advertiseAddress: 192.168.2.159
    bindPort: 6443
  certificateKey: 011acbb00e4983761f3cbe774f45477b75a99a42d14004f72c043ffbb6e5b025
EOF
kubeadm join --config=/root/kubeadm-config-join.yaml --v=10
```
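The JoinConfiguration files for host159 and host160 differ mainly in the node name and advertise address. A hypothetical helper like `render_join_config` can generate a trimmed version of them. The token, endpoint, CA hash and certificate key are the values used in this post and are passed in as arguments rather than hard-coded; the fields chosen are an assumption based on the files above.

```shell
#!/usr/bin/env bash
# render_join_config NODE_NAME ADVERTISE_ADDRESS TOKEN ENDPOINT CA_HASH CERT_KEY
# Hypothetical helper: print a control-plane JoinConfiguration (kubeadm.k8s.io/v1beta3).
render_join_config() {
  local name=$1 addr=$2 token=$3 endpoint=$4 hash=$5 key=$6
  cat <<EOF
apiVersion: kubeadm.k8s.io/v1beta3
kind: JoinConfiguration
nodeRegistration:
  name: ${name}
  criSocket: unix:///var/run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
discovery:
  bootstrapToken:
    token: ${token}
    apiServerEndpoint: ${endpoint}
    caCertHashes:
    - sha256:${hash}
  tlsBootstrapToken: ${token}
  timeout: 4m0s
controlPlane:
  localAPIEndpoint:
    advertiseAddress: ${addr}
    bindPort: 6443
  certificateKey: ${key}
EOF
}

# Usage on host159:
# h=011acbb00e4983761f3cbe774f45477b75a99a42d14004f72c043ffbb6e5b025
# render_join_config master-159 192.168.2.159 fk3wpg.gs0mcv4twx3tz2mc 192.168.2.158:6443 "$h" "$h" \
#   > /root/kubeadm-config-join.yaml
```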

Configure and verify

```shell
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
kubectl get nodes
```

host160 joins the cluster

Certificates

```shell
ssh root@192.168.2.160 "mkdir -p /etc/kubernetes/pki"
scp -r /etc/kubernetes/pki/* root@192.168.2.160:/etc/kubernetes/pki
```

Join

```shell
cat > /root/kubeadm-config-join.yaml <<EOF
apiVersion: kubeadm.k8s.io/v1beta3
kind: JoinConfiguration
nodeRegistration:
  name: master-160
  criSocket: unix:///var/run/containerd/containerd.sock
  taints: null
  ignorePreflightErrors:
  - IsPrivilegedUser
  imagePullPolicy: IfNotPresent
discovery:
  bootstrapToken:
    token: fk3wpg.gs0mcv4twx3tz2mc
    apiServerEndpoint: 192.168.2.158:6443
    unsafeSkipCAVerification: true
  tlsBootstrapToken: fk3wpg.gs0mcv4twx3tz2mc
  timeout: 4m0s
controlPlane:
  localAPIEndpoint:
    advertiseAddress: 192.168.2.160
    bindPort: 6443
  certificateKey: 011acbb00e4983761f3cbe774f45477b75a99a42d14004f72c043ffbb6e5b025
EOF
kubeadm join --config=/root/kubeadm-config-join.yaml --v=10
```

Configure and verify

```shell
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
kubectl get nodes
```

host161 joins the cluster

```shell
kubeadm join 192.168.2.158:6443 --node-name node-161 --token fk3wpg.gs0mcv4twx3tz2mc \
  --discovery-token-ca-cert-hash sha256:011acbb00e4983761f3cbe774f45477b75a99a42d14004f72c043ffbb6e5b025
```

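Export the kubeadm configuration

```shell
# "kubectl describe" output is not valid YAML, so extract the raw configuration from the ConfigMaps instead
kubectl get cm -n kube-system kubeadm-config -o jsonpath='{.data.ClusterConfiguration}' > ./kubeadm-config.yaml
kubectl get cm -n kube-system kubelet-config -o jsonpath='{.data.kubelet}' > ./kubelet-config.yaml
# Update the kubeconfig files
kubeadm init phase kubeconfig all --config=./kubeadm-config.yaml --v=5
```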

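kubelet configuration

- The KubeConfig file used for TLS bootstrap is /etc/kubernetes/bootstrap-kubelet.conf. It is only used if /etc/kubernetes/kubelet.conf does not exist.
- The KubeConfig file with the unique kubelet identity is /etc/kubernetes/kubelet.conf.
- The file containing the kubelet's component config is /var/lib/kubelet/config.yaml.
- The dynamic environment file containing KUBELET_KUBEADM_ARGS is sourced from /var/lib/kubelet/kubeadm-flags.env.
- The file that can contain user-specified flag overrides, KUBELET_EXTRA_ARGS, is sourced from /etc/default/kubelet (for DEBs) or /etc/sysconfig/kubelet (for RPMs). KUBELET_EXTRA_ARGS is last in the flag chain and has the highest priority when settings conflict.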
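KUBELET_EXTRA_ARGS comes last on the kubelet command line, so a single-valued flag set there overrides the same flag from kubeadm-flags.env: the last occurrence wins. The toy function below is hypothetical and not part of kubeadm; it only illustrates that rule by returning the last value given for a `--name=value` flag.

```shell
#!/usr/bin/env bash
# effective_flag NAME ARGS...
# Toy illustration: print the value of the last "--NAME=value" among ARGS,
# mimicking "last occurrence wins" for single-valued kubelet flags.
effective_flag() {
  local name=$1 value="" arg
  shift
  for arg in "$@"; do
    case $arg in
      --"$name"=*) value=${arg#--"$name"=} ;;
    esac
  done
  printf '%s\n' "$value"
}

# KUBELET_KUBEADM_ARGS first, KUBELET_EXTRA_ARGS last:
# effective_flag v --v=2 --node-ip=192.168.2.158 --v=4   # prints 4
```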

KubeProxy configuration

```yaml
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
bindAddress: 0.0.0.0
bindAddressHardFail: false
clientConnection:
  acceptContentTypes: ""
  burst: 0
  contentType: ""
  kubeconfig: /var/lib/kube-proxy/kubeconfig.conf
  qps: 0
clusterCIDR: 10.244.0.0/16
configSyncPeriod: 2s
conntrack:
  maxPerCore: null
  min: null
  tcpCloseWaitTimeout: 60s
  tcpEstablishedTimeout: 2s
detectLocalMode: ""
detectLocal:
  bridgeInterface: ""
  interfaceNamePrefix: ""
enableProfiling: false
healthzBindAddress: "0.0.0.0:10256"
hostnameOverride: "kube-proxy-158"
ipvs:
  excludeCIDRs: null
  minSyncPeriod: 1m
  scheduler: ""
  strictARP: true
  syncPeriod: 1m
  tcpFinTimeout: 0s
  tcpTimeout: 0s
  udpTimeout: 0s
metricsBindAddress: "127.0.0.1:10249"
mode: "ipvs"
nodePortAddresses: null
oomScoreAdj: null
portRange: ""
showHiddenMetricsForVersion: ""
udpIdleTimeout: 0s
winkernel:
  enableDSR: false
  forwardHealthCheckVip: false
  networkName: ""
  rootHnsEndpointName: ""
  sourceVip: ""
```
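`mode: "ipvs"` needs the IPVS kernel modules on every node. The hypothetical check below reports which of them are not currently loaded. The module list (ip_vs, ip_vs_rr, ip_vs_wrr, ip_vs_sh, nf_conntrack) is an assumption based on the kube-proxy IPVS README for kernels 4.19 and newer; modules built into the kernel do not appear in /proc/modules, so treat the result as a hint.

```shell
#!/usr/bin/env bash
# missing_ipvs_modules [MODULES_FILE]
# Hypothetical check: print each IPVS-related module not listed in MODULES_FILE
# (default /proc/modules, i.e. the currently loaded modules).
missing_ipvs_modules() {
  local file=${1:-/proc/modules} mod
  for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do
    grep -q "^${mod} " "$file" || echo "$mod"
  done
}

# Usage: for m in $(missing_ipvs_modules); do modprobe "$m"; done
```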

Official example

This is a complete example of a single YAML file containing multiple configuration types, for use during a kubeadm init run.

```yaml
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
bootstrapTokens:
- token: "9a08jv.c0izixklcxtmnze7"
  description: "kubeadm bootstrap token"
  ttl: "24h"
- token: "783bde.3f89s0fje9f38fhf"
  description: "another bootstrap token"
  usages:
  - authentication
  - signing
  groups:
  - system:bootstrappers:kubeadm:default-node-token
nodeRegistration:
  name: "ec2-10-100-0-1"
  criSocket: "unix:///var/run/containerd/containerd.sock"
  taints:
  - key: "kubeadmNode"
    value: "someValue"
    effect: "NoSchedule"
  kubeletExtraArgs:
    v: 4
  ignorePreflightErrors:
  - IsPrivilegedUser
  imagePullPolicy: "IfNotPresent"
localAPIEndpoint:
  advertiseAddress: "10.100.0.1"
  bindPort: 6443
certificateKey: "e6a2eb8581237ab72a4f494f30285ec12a9694d750b9785706a83bfcbbbd2204"
skipPhases:
- addon/kube-proxy
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
etcd:
  local:
    imageRepository: "k8s.gcr.io"
    imageTag: "3.2.24"
    dataDir: "/var/lib/etcd"
    extraArgs:
      listen-client-urls: "http://10.100.0.1:2379"
    serverCertSANs:
    - "ec2-10-100-0-1.compute-1.amazonaws.com"
    peerCertSANs:
    - "10.100.0.1"
networking:
  serviceSubnet: "10.96.0.0/16"
  podSubnet: "10.244.0.0/16"
  dnsDomain: "cluster.local"
kubernetesVersion: "v1.21.0"
controlPlaneEndpoint: "10.100.0.1:6443"
apiServer:
  extraArgs:
    authorization-mode: "Node,RBAC"
  extraVolumes:
  - name: "some-volume"
    hostPath: "/etc/some-path"
    mountPath: "/etc/some-pod-path"
    readOnly: false
    pathType: FileOrCreate
  certSANs:
  - "10.100.1.1"
  - "ec2-10-100-0-1.compute-1.amazonaws.com"
  timeoutForControlPlane: 4m0s
controllerManager:
  extraArgs:
    "node-cidr-mask-size": "20"
  extraVolumes:
  - name: "some-volume"
    hostPath: "/etc/some-path"
    mountPath: "/etc/some-pod-path"
    readOnly: false
    pathType: FileOrCreate
scheduler:
  extraArgs:
    address: "10.100.0.1"
  extraVolumes:
  - name: "some-volume"
    hostPath: "/etc/some-path"
    mountPath: "/etc/some-pod-path"
    readOnly: false
    pathType: FileOrCreate
certificatesDir: "/etc/kubernetes/pki"
imageRepository: "k8s.gcr.io"
clusterName: "example-cluster"
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
```

Configuration files

kubelet configuration

/var/lib/kubelet/config.yaml

master-158

```shell
cat > /var/lib/kubelet/config.yaml << EOF
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
enableServer: true
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.crt
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m
    cacheUnauthorizedTTL: 10s
cgroupDriver: systemd
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.158
cpuManagerReconcilePeriod: 0s
evictionPressureTransitionPeriod: 0s
fileCheckFrequency: 0s
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 0s
imageMinimumGCAge: 0s
logging:
  format: "text"
  flushFrequency: 1000
  options:
    json:
      infoBufferSize: "0"
  verbosity: 0
memorySwap: {}
staticPodPath: /etc/kubernetes/manifests
nodeStatusReportFrequency: 0s
nodeStatusUpdateFrequency: 0s
rotateCertificates: true
runtimeRequestTimeout: 0s
shutdownGracePeriod: 0s
shutdownGracePeriodCriticalPods: 0s
streamingConnectionIdleTimeout: 0s
syncFrequency: 0s
volumeStatsAggPeriod: 0s
EOF
```
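The kubelet's `cgroupDriver: systemd` has to match the cgroup driver of the container runtime. For containerd, that is the `SystemdCgroup` option of the runc runtime in `/etc/containerd/config.toml`. The sketch below (hypothetical function `containerd_uses_systemd_cgroup`) only does a plain-text check for an uncommented `SystemdCgroup = true` line; it does not parse TOML.

```shell
#!/usr/bin/env bash
# containerd_uses_systemd_cgroup [CONFIG]
# Hypothetical check: succeed if CONFIG (default /etc/containerd/config.toml)
# has an uncommented "SystemdCgroup = true" line. Plain text match, not a TOML parser.
containerd_uses_systemd_cgroup() {
  local cfg=${1:-/etc/containerd/config.toml}
  grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$cfg"
}

# Usage:
# containerd_uses_systemd_cgroup || echo "set SystemdCgroup = true and restart containerd"
```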

/etc/kubernetes/kubelet.conf

master-158

```shell
cat > /etc/kubernetes/kubelet.conf << EOF
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUMvakNDQWVhZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJeU1EVXlNakl4TkRRd04xb1hEVE15TURVeE9USXhORFF3TjFvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTUFMCjRkN2xWekIwZnlhazYrcFdaaGJiNzBqOWFqa3NveXkrTnIvaWYzV2RxVHRXNUNHZVcyRzEwRldaenNLd3RUWnoKYjRTSGRBV21LL1dTNUJocWVEeFVENVNPcTVXNXpqUHZFNWpGanlONThlN0RVM1gzS0NJSGcxbXUyVnRQSzZWWQpob2RISHJIaXBEM3lOalBQTk1ERzRGU1V3NUFMWmRsRTFLV3VMdWVlWHhnbEd5WlRodHFBUkU1N1Q4MUFNMm5zCkN1cmI5N3AwKyt1L3pnZHdDRG1iWmtWYUFrTUM3MjB3cTdsUWs2UnpLbHVHMHJreVVLb0lQTk9YTkhxby9UdXMKNUh1ditFby9SbGo4MUlyMkh2OUE1dCt4YUo0SDB6NS9GajQxNnAwQ0xENjBrWi9YQlJoNE5MTC9EYkNQQll6cgorUzhwbEQ2Y0VRMi8xellSeWI4Q0F3RUFBYU5aTUZjd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0hRWURWUjBPQkJZRUZEL2EzQ0JYT2h1Y2hrYzNkY3JhR291TDNLYzNNQlVHQTFVZEVRUU8KTUF5Q0NtdDFZbVZ5Ym1WMFpYTXdEUVlKS29aSWh2Y05BUUVMQlFBRGdnRUJBSStQdnZPK2VnMk5idUIrUUN2aQo3RktGUjJzOUQzSGxRNWtsRVZTbWJvSGRsN2Z5dkZhTUNPLzVQSk56cWUwRDNOL3F0MlJsWk43UEw0Q2VNaVFrCnM5Nk8zS2U4WVkrRmRUeXFJTW9MN2FKODNDTjE2NFR2S29Ld3JmL1pVaHZkQW1xS2NhM3I5blNGcjZreXVPZVAKV2cxMGlxcDMzS3ZqQjlJVFJBckxscDdRWm5JdmhMOVRVUk9YOW84amJvTVpjbU1QbG1lOEppRDcySkYzS0diUQpOMEJoTDYrVHo4RkJWSkNJYW9BRVQrQXlBdVFKdTl6cjMvd2FsZk9PcEdTSlhENmpjWGVsMldRVVFGQTJjUEpICndLSEtKNkRSL3pwKzNlTVRDWnhLMmloV2oyT0ZNbkV2UE9TcDZ5M0VtNmxmd0lXMktKSjVjakhnWTZOUTN1UWEKUUFBPQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==
    server: https://192.168.2.158:6443
  name: cluster.158
contexts:
- context:
    cluster: cluster.158
    user: system:node:master-158
  name: system:node:master-158@cluster.158
current-context: system:node:master-158@cluster.158
kind: Config
preferences: {}
users:
- name: system:node:master-158
  user:
    client-certificate: /var/lib/kubelet/pki/kubelet-client-current.pem
    client-key: /var/lib/kubelet/pki/kubelet-client-current.pem
EOF
```

/var/lib/kubelet/pki/kubelet-client-current.pem

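```shell
# The PEM content (client certificate and private key) is omitted in this post;
# running the command as-is writes an empty file.
cat > /var/lib/kubelet/pki/kubelet-client-current.pem << EOF
EOF
```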

附

错误处理

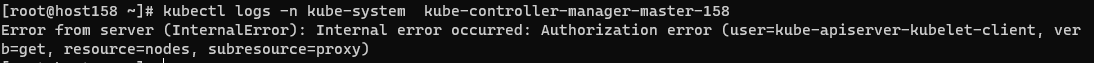

join 时的 JWS 问题

1

| The cluster-info ConfigMap does not yet contain a JWS signature for token ID "j2lxkq", will try again

|

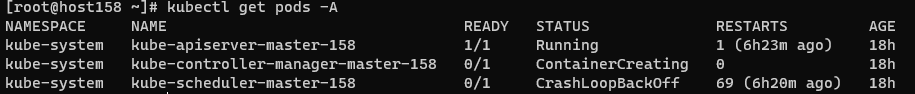

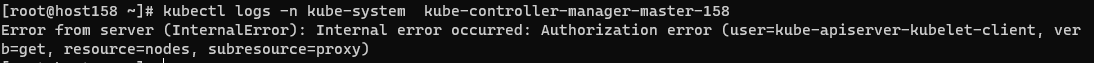

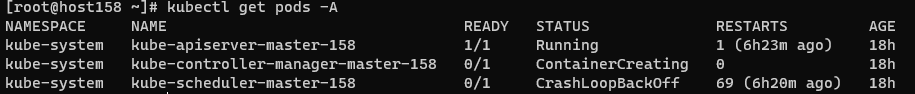

Here, `kubeadm token list` shows that the tokens are all fine.

The JWS signature in cluster-info is only created once kube-controller-manager is running (its bootstrap signer controller adds it), so check kube-controller-manager:

```shell
kubectl describe pod -n kube-system kube-controller-manager-master-158
kubectl logs -n kube-system kube-controller-manager-master-158
kubectl logs -n kube-system kube-controller-manager-master-158 --v=10
```
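Because the signature only appears after kube-controller-manager's bootstrap signer has processed the token, it can help to wait for it before running kubeadm join. Below is a hypothetical polling helper. It assumes kubectl on the node can read cluster-info, and that the key follows the `jws-kubeconfig-<token-id>` pattern seen in this post.

```shell
#!/usr/bin/env bash
# token_id TOKEN: print the ID part of "<id>.<secret>".
token_id() { printf '%s\n' "${1%%.*}"; }

# wait_for_jws TOKEN [TIMEOUT_SECONDS]
# Hypothetical helper: poll cluster-info until it has a "jws-kubeconfig-<token-id>"
# entry, or give up after TIMEOUT_SECONDS (default 120).
wait_for_jws() {
  local key deadline
  key="jws-kubeconfig-$(token_id "$1")"
  deadline=$(( SECONDS + ${2:-120} ))
  while (( SECONDS < deadline )); do
    if kubectl get configmap cluster-info -n kube-public -o yaml | grep -q "${key}:"; then
      echo "found ${key}"
      return 0
    fi
    sleep 5
  done
  echo "timed out waiting for ${key}; check kube-controller-manager" >&2
  return 1
}

# Usage: wait_for_jws fk3wpg.gs0mcv4twx3tz2mc 300
```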

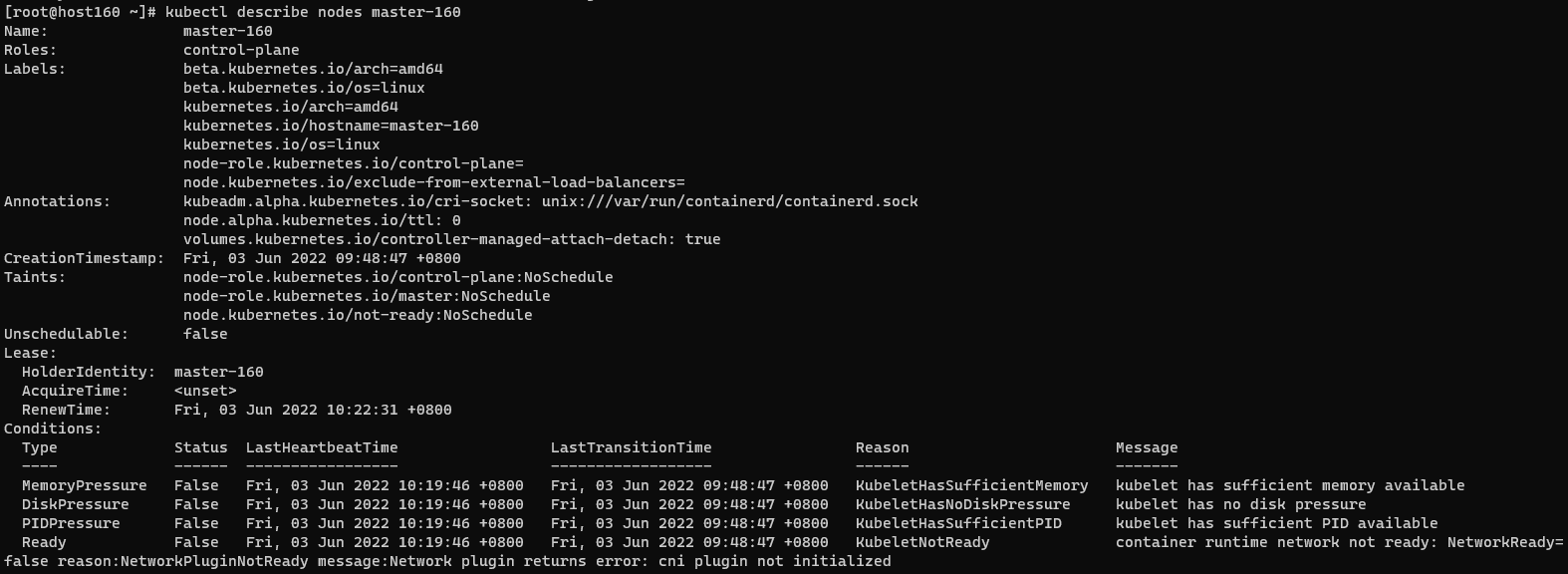

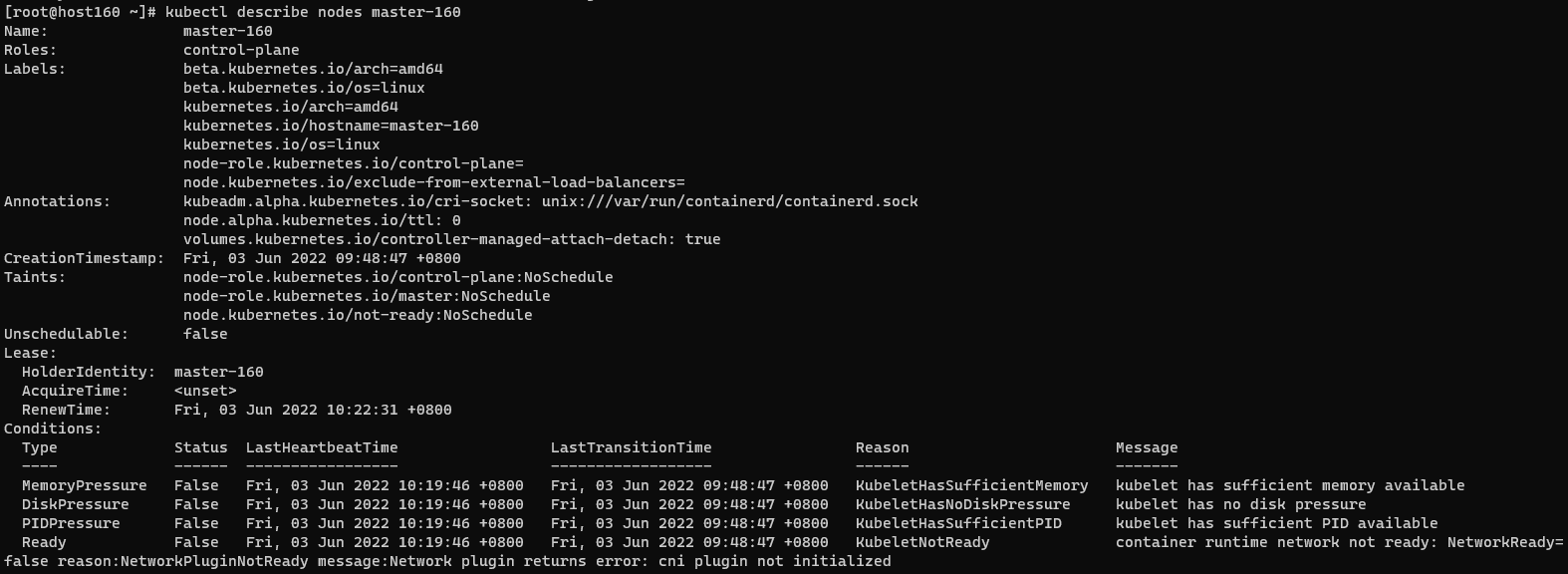

Node NotReady

```shell
kubectl describe nodes master-160
```

cni plugin not initialized

```shell
sudo systemctl restart containerd
```

Uninstalling the cluster

```shell
kubectl config delete-cluster k8s-cluster
echo "y" | kubeadm reset
rm -rf /etc/kubernetes/
rm -rf $HOME/.kube/config
rm -rf /var/lib/kubelet/
systemctl restart kubelet
```

Generating a token

Generate the join command in one step

```shell
kubeadm token create --print-join-command --ttl=240h
```
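`kubeadm token generate` prints a random token without creating it on the server. The token format is `[a-z0-9]{6}.[a-z0-9]{16}`. A hypothetical shell equivalent that reads from /dev/urandom could look like this; the generated value can then be passed to `kubeadm token create`.

```shell
#!/usr/bin/env bash
# rand_lower_alnum N: N random characters from [a-z0-9], read from /dev/urandom.
rand_lower_alnum() {
  LC_ALL=C tr -dc 'a-z0-9' < /dev/urandom | head -c "$1"
}

# gen_bootstrap_token: hypothetical stand-in for "kubeadm token generate".
gen_bootstrap_token() {
  printf '%s.%s\n' "$(rand_lower_alnum 6)" "$(rand_lower_alnum 16)"
}

# Usage: kubeadm token create "$(gen_bootstrap_token)" --ttl 240h --print-join-command
```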

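Generating the certificateKey

etcd-ca.crt is covered in the post on installing the etcd cluster.

```shell
kubeadm token create
# SHA-256 hash of the CA public key (the caCertHashes / --discovery-token-ca-cert-hash value)
openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | \
  openssl dgst -sha256 -hex | sed 's/^.* //'
```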
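The commands above print a bootstrap token and the CA public-key hash. A certificateKey is a different value: a hex-encoded 32-byte key that kubeadm uses to encrypt the control-plane certificates it uploads to the kubeadm-certs Secret (`--upload-certs`). `kubeadm certs certificate-key` prints a random one. Assuming only the format matters, `openssl rand -hex 32` gives a value of the same shape. The helpers below (hypothetical names) generate and check such a value.

```shell
#!/usr/bin/env bash
# gen_certificate_key: 32 random bytes, hex-encoded (64 characters).
gen_certificate_key() { openssl rand -hex 32; }

# is_certificate_key VALUE: succeed if VALUE is exactly 64 hex characters.
is_certificate_key() { [[ "$1" =~ ^[0-9a-fA-F]{64}$ ]]; }

# Usage:
# key=$(gen_certificate_key)
# kubeadm init phase upload-certs --upload-certs --certificate-key "$key"
```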

Full example

host158 master

```shell
mkdir -p /etc/kubernetes/pki/etcd/
#\cp /etc/certs/etcd/ca.pem /etc/kubernetes/pki/ca.crt
\cp /etc/certs/etcd/etcd-158.pem /etc/kubernetes/pki/etcd/etcd.crt
\cp /etc/certs/etcd/etcd-158-key.pem /etc/kubernetes/pki/etcd/etcd.key
# Compute the SHA-256 hash of the CA public key (the caCertHashes value; this post also reuses it as certificateKey)
# Default CA certificate: /etc/kubernetes/pki/ca.crt
openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | \
  openssl dgst -sha256 -hex | sed 's/^.* //'
vim ~/kubeadm-config-init.yaml
```


Write

```yaml
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: fk3wpg.gs0mcv4twx3tz2mc
  ttl: 240h0m0s
  usages:
  - signing
  - authentication
  description: "A human-friendly message explaining why this token exists and what it is used for"
nodeRegistration:
  name: master-158
  criSocket: unix:///var/run/containerd/containerd.sock
  taints: []
  kubeletExtraArgs:
    v: 4
  ignorePreflightErrors:
  - IsPrivilegedUser
  imagePullPolicy: IfNotPresent
localAPIEndpoint:
  advertiseAddress: 192.168.2.158
  bindPort: 6443
certificateKey: "011acbb00e4983761f3cbe774f45477b75a99a42d14004f72c043ffbb6e5b025"
skipPhases: []
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
clusterName: k8s-cluster
etcd:
  external:
    endpoints:
    - "https://192.168.2.158:2379"
    - "https://192.168.2.159:2379"
    - "https://192.168.2.160:2379"
    caFile: "/etc/kubernetes/pki/etcd/etcd-ca.crt"
    certFile: "/etc/kubernetes/pki/etcd/etcd.crt"
    keyFile: "/etc/kubernetes/pki/etcd/etcd.key"
networking:
  dnsDomain: cluster.158
  serviceSubnet: 10.96.0.0/16
  podSubnet: "10.244.0.0/16"
kubernetesVersion: 1.24.1
controlPlaneEndpoint: "192.168.2.158:6443"
apiServer:
  extraArgs:
    authorization-mode: "Node,RBAC"
  extraVolumes:
  - name: "some-volume"
    hostPath: "/etc/some-path"
    mountPath: "/etc/some-pod-path"
    readOnly: false
    pathType: FileOrCreate
  timeoutForControlPlane: 4m0s
controllerManager:
  extraArgs:
    "node-cidr-mask-size": "20"
  extraVolumes:
  - name: "some-volume"
    hostPath: "/etc/some-path"
    mountPath: "/etc/some-pod-path"
    readOnly: false
    pathType: FileOrCreate
scheduler:
  extraArgs:
    address: "192.168.2.158"
  extraVolumes:
  # Volume names and mount paths must be unique within a Pod; give these two distinct values before use
  - name: "kube-config"
    hostPath: "/etc/kubernetes/scheduler.conf"
    mountPath: "/etc/some-pod-path"
    readOnly: false
    pathType: FileOrCreate
  - name: "kube-config"
    hostPath: "/etc/kubernetes/kubescheduler-config.yaml"
    mountPath: "/etc/some-pod-path"
    readOnly: false
    pathType: FileOrCreate
dns: {}
certificatesDir: /etc/kubernetes/pki
imageRepository: registry.aliyuncs.com/google_containers
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
enableServer: true
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/etcd/etcd-ca.crt
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m
    cacheUnauthorizedTTL: 30s
cgroupDriver: systemd
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
healthzBindAddress: 192.168.2.158
healthzPort: 10248
logging:
  format: "text"
  flushFrequency: 1000
  options:
    json:
      infoBufferSize: "0"
  verbosity: 0
memorySwap: {}
staticPodPath: /etc/kubernetes/manifests
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
bindAddress: 0.0.0.0
bindAddressHardFail: false
clientConnection:
  acceptContentTypes: ""
  burst: 0
  contentType: ""
  kubeconfig: /var/lib/kube-proxy/kubeconfig.conf
  qps: 0
clusterCIDR: ""
configSyncPeriod: 2s
conntrack:
  maxPerCore: null
  min: null
  tcpCloseWaitTimeout: 60s
  tcpEstablishedTimeout: 2s
detectLocalMode: ""
detectLocal:
  bridgeInterface: ""
  interfaceNamePrefix: ""
enableProfiling: false
healthzBindAddress: "0.0.0.0:10256"
hostnameOverride: "kube-proxy-158"
iptables:
  masqueradeAll: false
  masqueradeBit: null
  minSyncPeriod: 1m
  syncPeriod: 1m
ipvs:
  excludeCIDRs: null
  minSyncPeriod: 1m
  scheduler: ""
  strictARP: true
  syncPeriod: 1m
  tcpFinTimeout: 0s
  tcpTimeout: 0s
  udpTimeout: 0s
metricsBindAddress: "127.0.0.1:10249"
mode: "ipvs"
nodePortAddresses: null
portRange: "0-0"
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: JoinConfiguration
nodeRegistration:
  name: master-158
  criSocket: unix:///var/run/containerd/containerd.sock
  taints: null
  kubeletExtraArgs:
    v: 4
  ignorePreflightErrors:
  - IsPrivilegedUser
  imagePullPolicy: IfNotPresent
caCertPath: "/etc/kubernetes/pki/etcd/etcd-ca.crt"
discovery:
  bootstrapToken:
    token: fk3wpg.gs0mcv4twx3tz2mc
    apiServerEndpoint: 192.168.2.158:6443
    caCertHashes:
    - sha256:011acbb00e4983761f3cbe774f45477b75a99a42d14004f72c043ffbb6e5b025
    unsafeSkipCAVerification: false
  tlsBootstrapToken: fk3wpg.gs0mcv4twx3tz2mc
  timeout: 4m0s
controlPlane:
  localAPIEndpoint:
    advertiseAddress: 192.168.2.158
    bindPort: 6443
  certificateKey: 011acbb00e4983761f3cbe774f45477b75a99a42d14004f72c043ffbb6e5b025
skipPhases:
- addon/kube-proxy
```

Post URL: https://github.com/maxzhao-it/blog/post/cdb1e23h/