Preface

This guide uses Kubernetes + containerd.io.

Note: the Dashboard setup did not succeed.

My hosts:

- 192.168.2.158 master-158
- 192.168.2.159 master-159
- 192.168.2.160 master-160
- 192.168.2.161 node-161
- 192.168.2.240 nfs

You may also need to:

- Install an etcd cluster
- Install Docker or containerd (covered below)

System configuration

Check the port

```shell
su root
sudo yum install -y netcat
nc 127.0.0.1 6443
```
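If `nc` is not available, bash's built-in `/dev/tcp` redirection can test whether something accepts TCP connections on a port. A minimal sketch, assuming bash and the coreutils `timeout` command; `port_open` is a hypothetical helper, not part of kubeadm:

```shell
#!/usr/bin/env bash
# port_open HOST PORT [SECONDS]
# Succeeds (exit 0) if a TCP connection to HOST:PORT is accepted within SECONDS
# (default 2). Uses bash's /dev/tcp redirection, so nc is not required.
port_open() {
  local host=$1 port=$2 secs=${3:-2}
  # HOST and PORT are passed as positional arguments to avoid quoting problems.
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}
```

For example, `port_open 127.0.0.1 6443 && echo open || echo closed`.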

Virtual machines need the ISO image mounted.

Disable the firewall, SELinux and swap; configure /etc/hosts

```shell
# Stop and disable the firewall
sudo systemctl stop firewalld
sudo systemctl disable firewalld

# SELinux: permissive now, disabled after the next reboot
setenforce 0
sed -i 's/enforcing/disabled/' /etc/selinux/config

# Turn swap off now and comment out swap entries in /etc/fstab
swapoff -a
sed -ri 's/.*swap.*/#&/' /etc/fstab

# Host names
cat >> /etc/hosts << EOF
192.168.2.158 host158
192.168.2.159 host159
192.168.2.160 host160
192.168.2.161 host161
192.168.2.240 host240
192.168.2.240 host241
192.168.2.158 master-158
192.168.2.159 master-159
192.168.2.160 master-160
192.168.2.161 node-161
EOF
cat /etc/hosts
```
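Appending with `cat >> /etc/hosts` adds duplicate lines every time the block is re-run. A minimal idempotent sketch, assuming bash and awk; `ensure_host` is a hypothetical helper, and the target file is a parameter so it can be tried on a copy of /etc/hosts first:

```shell
#!/usr/bin/env bash
# ensure_host FILE IP NAME
# Appends "IP NAME" to FILE unless some line already maps IP to NAME.
# Commented lines are ignored because their first field is "#".
ensure_host() {
  local file=$1 ip=$2 name=$3
  if ! awk -v ip="$ip" -v name="$name" '
      $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
      END { exit !found }' "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Hypothetical usage:
# while read -r ip name; do ensure_host /etc/hosts "$ip" "$name"; done <<'EOF'
# 192.168.2.158 master-158
# 192.168.2.159 master-159
# EOF
```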

Docker repository

```shell
# Mount the ISO image (virtual machines)
sudo mkdir /mnt/cdrom
sudo mount /dev/cdrom /mnt/cdrom/
sudo yum install -y yum-utils
# Add the Docker CE repository; pick one of the following sources
sudo yum-config-manager \
  --add-repo \
  https://download.docker.com/linux/centos/docker-ce.repo
sudo yum-config-manager \
  --add-repo \
  http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
sudo yum-config-manager \
  --add-repo \
  https://mirrors.tuna.tsinghua.edu.cn/docker-ce/linux/centos/docker-ce.repo
```

Configure the Kubernetes yum repository: /etc/yum.repos.d/kubernetes.repo

Using the Alibaba Cloud mirror:

```shell
cat > /etc/yum.repos.d/kubernetes.repo <<EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
```

Then refresh the yum cache (for example with `yum makecache`).

Or use the Huawei Cloud mirror:

```shell
# Quote the delimiter ('EOF') so that $basearch reaches yum unexpanded
cat > /etc/yum.repos.d/kubernetes.repo <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://repo.huaweicloud.com/kubernetes/yum/repos/kubernetes-el7-$basearch
enabled=1
gpgcheck=1
repo_gpgcheck=0
gpgkey=https://repo.huaweicloud.com/kubernetes/yum/doc/yum-key.gpg https://repo.huaweicloud.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
```
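Why the heredoc delimiter must be quoted here: `$basearch` is a yum variable, but inside an unquoted `<<EOF` heredoc the shell expands it first, normally to an empty string, which leaves `baseurl` ending in `kubernetes-el7-`. The sketch below (hypothetical helpers `render_repo` and `render_repo_unquoted`) prints both variants so the difference is visible:

```shell
#!/usr/bin/env bash
# render_repo: print the Huawei Cloud kubernetes.repo content to stdout.
# The quoted delimiter <<'EOF' disables parameter expansion, so the literal
# text $basearch is kept for yum to substitute with the CPU architecture.
render_repo() {
  cat <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://repo.huaweicloud.com/kubernetes/yum/repos/kubernetes-el7-$basearch
enabled=1
gpgcheck=1
repo_gpgcheck=0
gpgkey=https://repo.huaweicloud.com/kubernetes/yum/doc/yum-key.gpg https://repo.huaweicloud.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
}

# For comparison: with an unquoted delimiter the shell expands $basearch itself.
render_repo_unquoted() {
  cat <<EOF
baseurl=https://repo.huaweicloud.com/kubernetes/yum/repos/kubernetes-el7-$basearch
EOF
}
```

`render_repo | sudo tee /etc/yum.repos.d/kubernetes.repo` would write the correct variant.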

Installing kubeadm

When in doubt, restart. If a restart doesn't fix it, delete everything and start over.

```shell
systemctl restart kubelet
systemctl restart containerd
# Re-run individual kubeadm init phases with the same config file
kubeadm init phase upload-config all --config=/root/kubeadm-config-init.yaml --v=5
kubeadm init phase kubeconfig all --config=/root/kubeadm-config-init.yaml --v=5
kubeadm init phase addon kube-proxy --config=/root/kubeadm-config-init.yaml --v=5
```

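I. Install dependencies

Kubernetes packages

```shell
# Remove previous installations
sudo yum remove -y docker-ce docker-ce-cli docker-compose-plugin
sudo yum remove -y kubelet kubeadm kubectl

sudo setenforce 0
sed -i 's/enforcing/disabled/' /etc/selinux/config

# Install kubelet, kubeadm and kubectl.
# Consider pinning versions to match kubernetesVersion in the kubeadm config,
# e.g. kubelet-1.24.1 kubeadm-1.24.1 kubectl-1.24.1
sudo yum install -y kubelet kubeadm kubectl

sudo systemctl daemon-reload
sudo systemctl disable kubelet
sudo systemctl enable kubelet
# Until kubeadm init/join writes its config, kubelet restarts in a loop; this is expected
sudo systemctl start kubelet
sudo systemctl status kubelet -l

kubectl version --client
kubectl version --client --output=yaml
```

- kubelet: the node agent (node communication)
- kubeadm: the automated deployment tool
- kubectl: the cluster management CLI

This is the officially recommended method on Linux.

IPVS dependencies

```shell
sudo yum install -y ipset ipvsadm
# Check which IPVS modules are already loaded
lsmod | grep ip_vs
modprobe ip_vs
modprobe ip_vs_rr
modprobe ip_vs_wrr
modprobe ip_vs_sh
# On kernels >= 4.19 this module is named nf_conntrack
modprobe nf_conntrack_ipv4
```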
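After the `modprobe` calls it helps to confirm exactly which required modules are still missing rather than eyeballing `lsmod | grep ip_vs`. A small sketch; `missing_modules` is a hypothetical helper that reads `lsmod`-formatted text on stdin:

```shell
#!/usr/bin/env bash
# missing_modules MOD...
# Reads `lsmod` output on stdin and prints each requested module that is not
# listed in the first column. Prints nothing when all of them are loaded.
missing_modules() {
  local loaded m
  # Skip the "Module Size Used by" header and keep only module names.
  loaded=$(awk 'NR > 1 { print $1 }')
  for m in "$@"; do
    # -x: whole-line match, so ip_vs does not match ip_vs_rr
    grep -qx -- "$m" <<<"$loaded" || echo "$m"
  done
}

# On a node (use nf_conntrack instead of nf_conntrack_ipv4 on kernels >= 4.19):
# lsmod | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4
```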

Install kubectl command completion (optional)

```shell
sudo yum install -y bash-completion
cat /usr/share/bash-completion/bash_completion
# Verify that bash-completion is loaded
type _init_completion
source /usr/share/bash-completion/bash_completion
kubectl completion bash | sudo tee /etc/bash_completion.d/kubectl > /dev/null

# Optional short alias "k" with completion
echo 'alias k=kubectl' >>~/.bashrc
echo 'complete -F __start_kubectl k' >>~/.bashrc
```

II. Install kubeadm (all nodes)

Let iptables see bridged traffic

```shell
lsmod | grep br_netfilter
sudo modprobe br_netfilter
echo "1" > /proc/sys/net/bridge/bridge-nf-call-iptables

# Load br_netfilter at boot
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
br_netfilter
EOF

# Persist the sysctl settings
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
sudo sysctl --system
```
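To double-check the drop-in file itself, here is a minimal sketch (hypothetical helper `check_sysctl_conf`) that verifies the three keys written above are set to 1. The live values can be checked separately with `sysctl net.bridge.bridge-nf-call-iptables net.ipv4.ip_forward`.

```shell
#!/usr/bin/env bash
# check_sysctl_conf FILE
# Prints every required key that is missing from FILE or not set to 1,
# and returns non-zero if there is any. The last assignment in FILE wins.
check_sysctl_conf() {
  local file=$1 key val bad=0
  for key in net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables \
             net.ipv4.ip_forward; do
    val=$(awk -F= -v k="$key" '
      { gsub(/[[:space:]]/, "") }   # accept both "key = 1" and "key=1"
      $1 == k { v = $2 }
      END { print v }' "$file")
    if [ "$val" != "1" ]; then
      echo "$key"
      bad=1
    fi
  done
  return "$bad"
}

# check_sysctl_conf /etc/sysctl.d/k8s.conf
```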

Make sure the MAC address and product_uuid are unique on every node.

You can get the MAC addresses of the network interfaces with `ip link` or `ifconfig -a`.

Check the product_uuid with `sudo cat /sys/class/dmi/id/product_uuid`.
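Comparing these by hand gets tedious with several hosts, and VMs cloned from one template can end up sharing values. A sketch, assuming root SSH access to every node (as used later for copying certificates); `find_duplicate_ids` is a hypothetical helper that reports any value seen on more than one host:

```shell
#!/usr/bin/env bash
# find_duplicate_ids
# Reads "HOST VALUE" lines on stdin (e.g. a product_uuid or MAC per host) and
# prints "VALUE: HOST HOST ..." for every VALUE reported by more than one host.
find_duplicate_ids() {
  awk '
    NF >= 2 {
      count[$2]++
      hosts[$2] = hosts[$2] " " $1
    }
    END {
      for (v in count) if (count[v] > 1) print v ":" hosts[v]
    }'
}

# Hypothetical usage:
# for h in 192.168.2.158 192.168.2.159 192.168.2.160 192.168.2.161; do
#   echo "$h $(ssh root@$h cat /sys/class/dmi/id/product_uuid)"
# done | find_duplicate_ids
```

Empty output means every value is unique.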

III. Container runtime (all nodes)

Install containerd (prerequisites)

```shell
# Swap must be off
swapoff -a
sed -ri 's/.*swap.*/#&/' /etc/fstab

# Kernel modules needed by containerd, loaded now and at boot
cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf
overlay
br_netfilter
EOF
sudo modprobe overlay
sudo modprobe br_netfilter
lsmod | grep br_netfilter

# sysctl settings needed by the CRI
cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
EOF
sudo sysctl --system
```

Install containerd.io

Configure the Docker repository first: the containerd.io package is installed from the Docker CE repository.

```shell
sudo yum install -y containerd.io
# Generate the default config and switch runc to the systemd cgroup driver
sudo mkdir -p /etc/containerd
containerd config default | sudo tee /etc/containerd/config.toml
sudo sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml
sudo cat /etc/containerd/config.toml | grep SystemdCgroup
sudo systemctl restart containerd
sudo systemctl enable containerd
sudo systemctl status containerd
```

GitHub

Result:

```toml
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]
  [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
    SystemdCgroup = true
```

When using kubeadm, the kubelet's cgroup driver must match the container runtime's (systemd in this guide).
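Since v1.22, kubeadm defaults the kubelet's `cgroupDriver` to `systemd` when it is not set, so with `SystemdCgroup = true` in containerd the two should agree. A minimal sketch to confirm this on a node after `kubeadm init`/`join`; `cgroup_drivers_match` is a hypothetical helper that only does simple text matching on the two files:

```shell
#!/usr/bin/env bash
# cgroup_drivers_match CONTAINERD_TOML KUBELET_YAML
# Prints the cgroup driver each side uses; returns 0 only if they are equal.
cgroup_drivers_match() {
  local toml=$1 yaml=$2 runtime kubelet
  # containerd/runc: "SystemdCgroup = true" selects the systemd driver,
  # otherwise runc uses cgroupfs.
  if grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$toml"; then
    runtime=systemd
  else
    runtime=cgroupfs
  fi
  # kubelet: the "cgroupDriver:" field of its KubeletConfiguration file.
  kubelet=$(awk '$1 == "cgroupDriver:" { print $2 }' "$yaml")
  echo "containerd=${runtime} kubelet=${kubelet:-unset}"
  [ "$runtime" = "$kubelet" ]
}

# cgroup_drivers_match /etc/containerd/config.toml /var/lib/kubelet/config.yaml
```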

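IV. Create the cluster

CA certificates (optional, can be skipped)

Generate the CA certificates

| Path | Default CN | Description |
|---|---|---|
| ca.crt,key | kubernetes-ca | General Kubernetes CA |
| etcd/ca.crt,key | etcd-ca | For all etcd-related functions |
| front-proxy-ca.crt,key | kubernetes-front-proxy-ca | For the front-end proxy |

Besides the CAs above, you also need a key pair for service account management: sa.key and sa.pub.

The following example shows the CA key and certificate files from the table above.

```
/etc/kubernetes/pki/ca.crt
/etc/kubernetes/pki/ca.key
/etc/kubernetes/pki/etcd/ca.crt
/etc/kubernetes/pki/etcd/ca.key
/etc/kubernetes/pki/front-proxy-ca.crt
/etc/kubernetes/pki/front-proxy-ca.key
```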
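If you do want to create these yourself, a minimal `openssl` sketch follows; kubeadm uses an existing key pair it finds in `/etc/kubernetes/pki` instead of generating a new one. Assumptions: RSA 2048 keys, a 10-year validity, and a default `openssl.cnf` that marks `req -x509` certificates as CAs (verify with `openssl x509 -noout -text -in ca.crt`). `make_ca` and `make_sa_keys` are hypothetical helper names:

```shell
#!/usr/bin/env bash
# make_ca DIR NAME CN
# Creates DIR/NAME.key (RSA 2048) and a self-signed DIR/NAME.crt with subject CN.
make_ca() {
  local dir=$1 name=$2 cn=$3
  mkdir -p "$dir"
  openssl genrsa -out "$dir/$name.key" 2048 2>/dev/null
  # -x509 produces a self-signed certificate instead of a signing request.
  openssl req -x509 -new -key "$dir/$name.key" \
    -subj "/CN=$cn" -days 3650 -out "$dir/$name.crt"
}

# make_sa_keys DIR: the service-account key pair sa.key / sa.pub.
make_sa_keys() {
  local dir=$1
  mkdir -p "$dir"
  openssl genrsa -out "$dir/sa.key" 2048 2>/dev/null
  openssl rsa -in "$dir/sa.key" -pubout -out "$dir/sa.pub" 2>/dev/null
}

# Layout from the table above (as root, on the first control-plane node):
# make_ca /etc/kubernetes/pki ca kubernetes-ca
# make_ca /etc/kubernetes/pki/etcd ca etcd-ca
# make_ca /etc/kubernetes/pki front-proxy-ca kubernetes-front-proxy-ca
# make_sa_keys /etc/kubernetes/pki
```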

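PKI certificates and requirements

etcd cluster (with CA): Install an etcd cluster (with CA)

List image versions

```shell
# Add --config=kubeadm-config-init.yaml to use the imageRepository and
# kubernetesVersion from that file instead of the defaults
kubeadm config images list
kubeadm config images pull
```

Create the master node from a config file (strongly recommended)

Creating cluster nodes with a config file

Write the config: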

```shell
cat > ~/kubeadm-config-init.yaml <<EOF
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: fk3wpg.gs0mcv4twx3tz2mc
  ttl: 240h0m0s
  usages:
  - signing
  - authentication
  description: "A human-friendly message explaining why this token exists and what it is used for"
# NodeRegistration holds fields related to registering the new control-plane node with the cluster
nodeRegistration:
  name: master-158
  criSocket: unix:///var/run/containerd/containerd.sock
  ignorePreflightErrors:
  - IsPrivilegedUser
# LocalAPIEndpoint is the endpoint of the API server instance deployed on this control-plane node
localAPIEndpoint:
  advertiseAddress: 192.168.2.158
  bindPort: 6443
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
clusterName: k8s-cluster
etcd:
  external:
    endpoints:
    - "https://192.168.2.158:2379"
    - "https://192.168.2.159:2379"
    - "https://192.168.2.160:2379"
    caFile: "/etc/certs/etcd/ca.pem"
    certFile: "/etc/certs/etcd/etcd-158.pem"
    keyFile: "/etc/certs/etcd/etcd-158-key.pem"
# Networking holds the configuration of the cluster's network topology
networking:
  dnsDomain: cluster.158
  serviceSubnet: 10.96.0.0/16
  podSubnet: "10.244.0.0/16"
kubernetesVersion: 1.24.1
controlPlaneEndpoint: "192.168.2.158:6443"
# APIServer holds extra settings for the API server control-plane component
apiServer:
  extraArgs:
    bind-address: 0.0.0.0
    authorization-mode: "Node,RBAC"
    #service-cluster-ip-range: 10.96.0.0/16
    #service-node-port-range: 30000-32767
  timeoutForControlPlane: 4m0s
  certSANs:
  - "localhost"
  - "cluster.158"
  - "127.0.0.1"
  - "master-158"
  - "master-159"
  - "master-160"
  - "node-161"
  - "10.96.0.1"
  - "10.244.0.1"
  - "192.168.2.158"
  - "192.168.2.159"
  - "192.168.2.160"
  - "192.168.2.161"
  - "host158"
  - "host159"
  - "host160"
  - "host161"
# ControllerManager holds extra settings for the controller-manager control-plane component
controllerManager:
  extraArgs:
    bind-address: 0.0.0.0
    #"node-cidr-mask-size": "20"
    # must match KubeProxyConfiguration.clusterCIDR
    #cluster-cidr: 10.244.0.0/16
    #service-cluster-ip-range: 10.96.0.0/16
    #config: /etc/kubernetes/scheduler-config.yaml
  #extraVolumes:
  #  - name: schedulerconfig
  #    hostPath: /home/johndoe/schedconfig.yaml
  #    mountPath: /etc/kubernetes/scheduler-config.yaml
  #    readOnly: true
  #    pathType: "File"
# Scheduler holds extra settings for the scheduler control-plane component
scheduler:
  extraArgs:
    bind-address: 0.0.0.0
    #config: /etc/kubernetes/kubescheduler-config.yaml
  #extraVolumes:
  #  - hostPath: /etc/kubernetes/kubescheduler-config.yaml
  #    mountPath: /etc/kubernetes/kubescheduler-config.yaml
  #    name: kubescheduler-config
  #    readOnly: true
# DNS defines options for the DNS add-on installed in the cluster
dns: {}
certificatesDir: /etc/kubernetes/pki
#imageRepository: k8s.gcr.io
imageRepository: registry.aliyuncs.com/google_containers
# FeatureGates enabled by the user
#featureGates:
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
bindAddress: 0.0.0.0
bindAddressHardFail: false
clientConnection:
  acceptContentTypes: ""
  burst: 0
  contentType: ""
  kubeconfig: /var/lib/kube-proxy/kubeconfig.conf
  qps: 0
clusterCIDR: 10.244.0.0/16
configSyncPeriod: 2s
conntrack:
  maxPerCore: null
  min: null
  tcpCloseWaitTimeout: 60s
  tcpEstablishedTimeout: 2s   # note: kube-proxy's default is 24h0m0s
# Empty means the default, LocalModeClusterCIDR
detectLocalMode: ""
detectLocal:
  bridgeInterface: ""
  interfaceNamePrefix: ""
enableProfiling: false
healthzBindAddress: "0.0.0.0:10256"
hostnameOverride: "kube-proxy-158"
ipvs:
  excludeCIDRs: null
  minSyncPeriod: 1m
  scheduler: ""
  strictARP: true
  syncPeriod: 1m
  tcpFinTimeout: 0s
  tcpTimeout: 0s
  udpTimeout: 0s
metricsBindAddress: "127.0.0.1:10249"
mode: "ipvs"
nodePortAddresses: null
oomScoreAdj: null
portRange: ""
showHiddenMetricsForVersion: ""
udpIdleTimeout: 0s
winkernel:
  enableDSR: false
  forwardHealthCheckVip: false
  networkName: ""
  rootHnsEndpointName: ""
  sourceVip: ""
EOF
```
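The comments in this config say `podSubnet` and `KubeProxyConfiguration.clusterCIDR` must agree. A rough consistency check, assuming the one-key-per-line layout used above; `check_pod_cidr` is a hypothetical helper, not a kubeadm command:

```shell
#!/usr/bin/env bash
# check_pod_cidr FILE
# Compares networking.podSubnet with KubeProxyConfiguration.clusterCIDR in a
# multi-document kubeadm config file. Commented-out keys are ignored.
check_pod_cidr() {
  local file=$1 pod proxy
  # First occurrence of each key; optional double quotes are stripped.
  pod=$(awk '$1 == "podSubnet:" { gsub(/"/, "", $2); print $2; exit }' "$file")
  proxy=$(awk '$1 == "clusterCIDR:" { gsub(/"/, "", $2); print $2; exit }' "$file")
  if [ -n "$pod" ] && [ "$pod" = "$proxy" ]; then
    echo "ok: $pod"
  else
    echo "mismatch: podSubnet=${pod:-unset} clusterCIDR=${proxy:-unset}" >&2
    return 1
  fi
}

# check_pod_cidr ~/kubeadm-config-init.yaml
```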

创建

1 kubeadm init --config=kubeadm-config-init.yaml --v=5

直接创建 master节点(不建议) 1 2 3 4 5 6 7 8 9 10 11 12 sudo kubeadm init \ --node-name master-158 \ --apiserver-advertise-address=192.168.2.158 \ --image-repository registry.aliyuncs.com/google_containers \ --kubernetes-version v1.24.1 \ --service-cidr=10.96.0.0/16 \ --pod-network-cidr=10.244.0.0/16

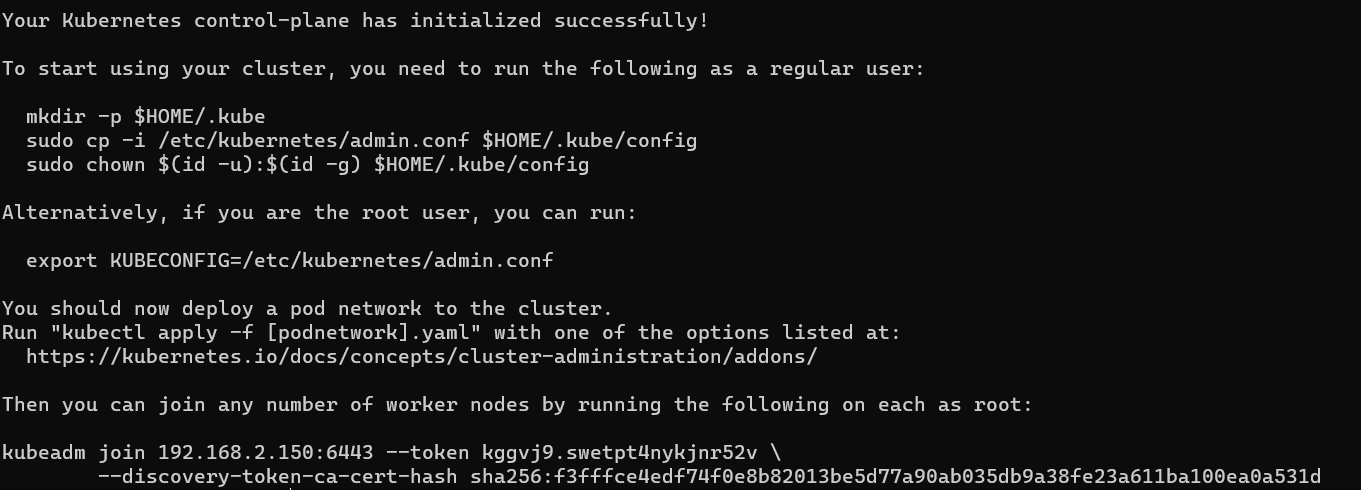

非Root用户运行kubectl配置 1 2 3 4 5 6 mkdir -p $HOME /.kubesudo cp -i /etc/kubernetes/admin.conf $HOME /.kube/config sudo chown $(id -u):$(id -g) $HOME /.kube/config export KUBECONFIG=/etc/kubernetes/admin.conf

Join the cluster

On host158, generate the join command:

```shell
kubeadm token create --print-join-command
```

Copy the certificates to host159

```shell
ssh root@192.168.2.159 "mkdir -p /etc/kubernetes/pki"
scp -r /etc/kubernetes/pki/* root@192.168.2.159:/etc/kubernetes/pki
```
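Copying all of `pki/*` also copies certificates that belong to host158 itself (for example `apiserver.crt`). The manual certificate distribution section of kubeadm's high-availability documentation lists only the shared CA and service-account files. A sketch that stages just those (`stage_shared_certs` is a hypothetical helper); with the external etcd used here, `etcd/ca.*` is simply reported as not found. As an alternative, `kubeadm init --upload-certs` together with `kubeadm join --certificate-key` distributes these files automatically.

```shell
#!/usr/bin/env bash
# stage_shared_certs SRC DST
# Copies only the cluster-wide CA and service-account files that every
# control-plane node must share, preserving the etcd/ subdirectory.
stage_shared_certs() {
  local src=$1 dst=$2 f
  local files=(ca.crt ca.key sa.key sa.pub front-proxy-ca.crt front-proxy-ca.key
               etcd/ca.crt etcd/ca.key)
  for f in "${files[@]}"; do
    if [ -f "$src/$f" ]; then
      mkdir -p "$dst/$(dirname "$f")"
      cp -p "$src/$f" "$dst/$f"
    else
      echo "skip (not found): $f" >&2
    fi
  done
}

# Hypothetical usage on host158:
# stage_shared_certs /etc/kubernetes/pki /tmp/pki-shared
# ssh root@192.168.2.159 "mkdir -p /etc/kubernetes/pki"
# scp -r /tmp/pki-shared/* root@192.168.2.159:/etc/kubernetes/pki/
```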

Join:

```shell
kubeadm join 192.168.2.158:6443 --node-name master-159 --token fk3wpg.gs0mcv4twx3tz2mc \
  --discovery-token-ca-cert-hash sha256:533e488affe662b66310ed8a353b1fba17c354e4c0df5285b95a01a33e986219 \
  --control-plane --v=5
```

Configure and verify:

```shell
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
kubectl get nodes
```

Possible error at this step: the cluster-info ConfigMap has no JWS signature for the token (see the troubleshooting section at the end).

Copy the certificates to host160

```shell
ssh root@192.168.2.160 "mkdir -p /etc/kubernetes/pki"
scp -r /etc/kubernetes/pki/* root@192.168.2.160:/etc/kubernetes/pki
```

Join:

```shell
kubeadm join 192.168.2.158:6443 --node-name master-160 --token fk3wpg.gs0mcv4twx3tz2mc \
  --discovery-token-ca-cert-hash sha256:533e488affe662b66310ed8a353b1fba17c354e4c0df5285b95a01a33e986219 \
  --control-plane --v=5
```

Configure and verify:

```shell
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
kubectl get nodes
```

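Join host161 (worker node):

```shell
kubeadm join 192.168.2.158:6443 --node-name node-161 --token fk3wpg.gs0mcv4twx3tz2mc \
  --discovery-token-ca-cert-hash sha256:533e488affe662b66310ed8a353b1fba17c354e4c0df5285b95a01a33e986219 --v=5
```

`--experimental-control-plane` joined a node as a control-plane node in older kubeadm releases; current releases use `--control-plane` instead.

Run on the control-plane node host158: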

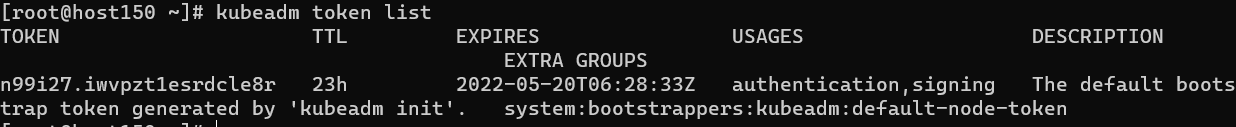

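Get a token (list the existing ones with `kubeadm token list`).

Create a new token (`kubeadm token create`).

Or create the join command directly: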

```shell
kubeadm token create --print-join-command --ttl=240h
```

If you don't have the value for --discovery-token-ca-cert-hash, you can get it by running the following command chain on a control-plane node:

```shell
openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | \
  openssl dgst -sha256 -hex | sed 's/^.* //'
```

The output is similar to:

```
5094a16f108636a64edc65194ef8f61b446f831ebab3265dda5723a394030ee1
```
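The same pipeline wrapped as a reusable function (`ca_cert_hash` is a hypothetical name). It uses `openssl pkey` instead of `openssl rsa`, which hashes the same DER-encoded public key for RSA CAs and also works if the CA key is not RSA:

```shell
#!/usr/bin/env bash
# ca_cert_hash CA_CRT
# Prints the hex value for --discovery-token-ca-cert-hash (without "sha256:"):
# the SHA-256 of the CA certificate's DER-encoded public key (SubjectPublicKeyInfo).
ca_cert_hash() {
  openssl x509 -pubkey -noout -in "$1" \
    | openssl pkey -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# On the control-plane node:
# echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
```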

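Check certificates

```shell
# Since kubeadm 1.20 these replace the former `kubeadm alpha certs ...` commands,
# which no longer exist in 1.24
kubeadm certs check-expiration
kubeadm certs renew all
```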
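The kubeadm certificate commands cover only the certificates kubeadm manages; kubeadm did not create the external etcd certificates under `/etc/certs`, so those need checking separately. For any single certificate, `openssl x509 -checkend N` answers "is it still valid N seconds from now?". A sketch with a hypothetical `expires_within` helper:

```shell
#!/usr/bin/env bash
# expires_within CERT DAYS
# Returns 0 if CERT expires within DAYS days (or already has), 1 if not,
# and 2 if the file does not exist.
expires_within() {
  local cert=$1 days=$2
  [ -f "$cert" ] || return 2
  # -checkend exits 0 when the certificate is still valid after that many seconds.
  ! openssl x509 -checkend $(( days * 86400 )) -noout -in "$cert" >/dev/null
}

# Hypothetical usage:
# for c in /etc/certs/*.pem /etc/kubernetes/pki/*.crt; do
#   expires_within "$c" 30 && echo "renew soon: $c"
# done
```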

Configuration files used during `kubeadm init`:

```
/etc/kubernetes
/etc/kubernetes/kubelet.conf
/var/lib/kubelet/kubeadm-flags.env
/var/lib/kubelet/config.yaml
/etc/kubernetes/manifests
```

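V. Install the pod network plugin

- Flannel: the most mature and simplest option (used here).
- Calico: good performance and the most flexibility; currently the mainstream enterprise choice.
- Canal: combines the network layer provided by Flannel with Calico's network policy features.
- Weave: its distinctive feature is simple encryption of the whole network, which adds network overhead.
- Kube-router: uses LVS for Service networking and BGP for pod networking.
- CNI-Genie: lets k8s use multiple CNI network plugins; isolation policies are not yet supported.

Container networking solutions for k8s fall broadly into two categories: tunnel-based and routing-based.

Tunnel-based: flannel's vxlan mode and calico's ipip mode are tunnel modes.

Routing-based: flannel's host-gw mode and calico's bgp mode are routing solutions.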

Calico installation (recommended): Installing the Calico pod network plugin on k8s (etcd + TLS)

containerd registry mirror configuration

Calico GitHub

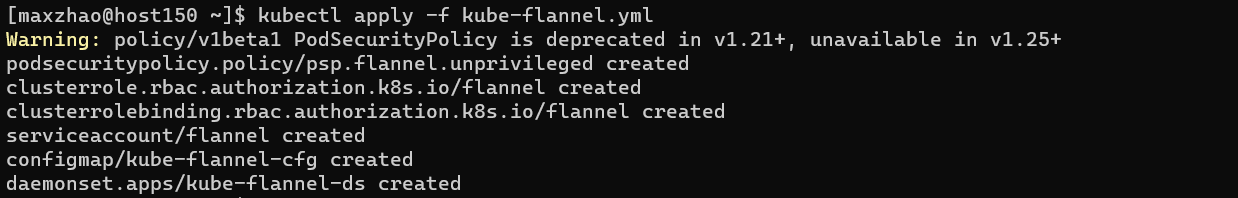

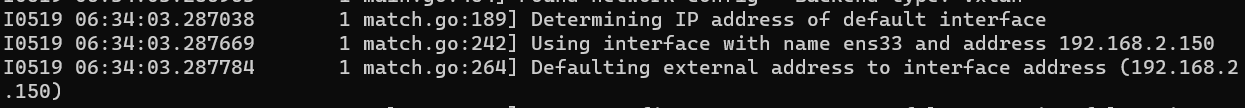

Flannel installation

Run on the master:

```shell
cd ~
wget https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml -O kube-flannel.yml
kubectl apply -f https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml
```

It is recommended that flannel use the Kubernetes API as its backing store, which avoids deploying a separate etcd cluster just for flannel.

Flannel GitHub repository

Edit kube-flannel.yml to specify the network interface

Without an explicit interface, flannel fails with: Failed to find any valid interface to use: failed to get default interface: protocol not available

Error: Failed to find interface

```
Could not find valid interface matching ens33: failed to find IPv4 address for interface ens33
Failed to find interface to use that matches the interfaces and/or regexes provided
```
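flanneld accepts an `--iface` argument naming the interface to use. A sketch that adds it right after `--kube-subnet-mgr` in the downloaded manifest, assuming GNU sed and the `args:` layout of the upstream kube-flannel.yml; `add_flannel_iface` is a hypothetical helper. The interface (ens33 in the error above) must exist with an IPv4 address on every node, otherwise flanneld fails with exactly that "Could not find valid interface" error.

```shell
#!/usr/bin/env bash
# add_flannel_iface FILE IFACE
# Inserts "- --iface=IFACE" after the "- --kube-subnet-mgr" argument, reusing
# that line's indentation. Does nothing if an --iface argument is already there.
add_flannel_iface() {
  local file=$1 iface=$2
  grep -q -- '- --iface=' "$file" && return 0
  # GNU sed: "\n" in the replacement starts a new line, "\1" is the indentation.
  sed -i 's/^\([[:space:]]*\)- --kube-subnet-mgr[[:space:]]*$/&\n\1- --iface='"$iface"'/' "$file"
}

# add_flannel_iface ~/kube-flannel.yml ens33
```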

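Open a shell in the container

```shell
kubectl -n kube-system exec -it kube-flannel-ds-f92wg -- sh
kubectl -n kube-system exec -it kube-flannel-ds-f92wg -- bash
```

Change the network range

If you change the --pod-network-cidr=10.244.0.0/16 passed to kubeadm init (podSubnet in the config file), you must change the network in kube-flannel.yml to match.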
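In kube-flannel.yml the pod network lives in the `"Network"` field of the `net-conf.json` entry. A quick comparison using a simple text match on that field; `flannel_network` and `check_flannel_network` are hypothetical helpers:

```shell
#!/usr/bin/env bash
# flannel_network FILE: print the "Network" value from kube-flannel.yml.
flannel_network() {
  grep -o '"Network": *"[^"]*"' "$1" | head -n1 | sed 's/.*: *"\([^"]*\)"/\1/'
}

# check_flannel_network FILE POD_CIDR: returns non-zero if the two differ.
check_flannel_network() {
  local net
  net=$(flannel_network "$1")
  if [ "$net" != "$2" ]; then
    echo "kube-flannel.yml Network=${net:-unset}, expected $2" >&2
    return 1
  fi
}

# check_flannel_network ~/kube-flannel.yml 10.244.0.0/16
```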

Apply:

```shell
kubectl apply -f kube-flannel.yml
```

Inspect the DaemonSet configuration

```shell
kubectl -n kube-system get ds kube-flannel-ds -o yaml
```

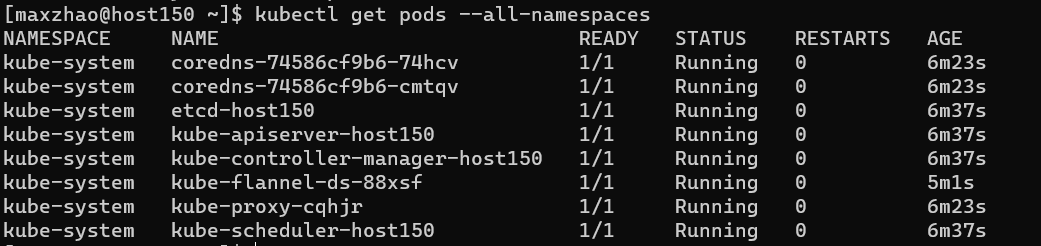

Verify

```shell
kubectl get pods --all-namespaces
kubectl get pods -n kube-system
```

```shell
kubectl logs -n kube-system kube-flannel-ds-88xsf -f
```

Source code analysis of kube-proxy IPVS mode

Load balancing with IPVS

Get nodes

etcd cluster guide: Install an etcd cluster

Check the running status

```shell
ETCDCTL_API=3 /opt/etcd/etcdctl \
  --endpoints 192.168.2.158:2379,192.168.2.159:2379,192.168.2.160:2379 \
  --cacert /etc/certs/etcd-root-ca.pem \
  --cert /etc/certs/etcd-158.pem \
  --key /etc/certs/etcd-158-key.pem \
  endpoint health

ENDPOINTS=192.168.2.158:2379,192.168.2.159:2379,192.168.2.160:2379
ETCD_AUTH='--cacert /etc/certs/etcd-root-ca.pem --cert /etc/certs/etcd-158.pem --key /etc/certs/etcd-158-key.pem'
# ${ETCD_AUTH} is left unquoted on purpose so it splits into separate flags
etcdctl --write-out=table --endpoints=${ENDPOINTS} ${ETCD_AUTH} --user=root:1 endpoint status
```
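Because every member is reached over TLS, it is less error-prone to build the endpoint list once with an explicit `https://` scheme, as the `etcd.external.endpoints` of the kubeadm config already does. `etcd_endpoints` is a hypothetical helper; the port defaults to 2379 and can be overridden with `ETCD_PORT`:

```shell
#!/usr/bin/env bash
# etcd_endpoints HOST...
# Joins HOSTs into "https://HOST:PORT,..." for etcdctl --endpoints or
# kube-apiserver --etcd-servers.
etcd_endpoints() {
  local port=${ETCD_PORT:-2379} out="" h
  for h in "$@"; do
    out+="${out:+,}https://${h}:${port}"
  done
  printf '%s\n' "$out"
}

# Hypothetical usage:
# ENDPOINTS=$(etcd_endpoints 192.168.2.158 192.168.2.159 192.168.2.160)
# ETCDCTL_API=3 etcdctl --endpoints="$ENDPOINTS" \
#   --cacert /etc/certs/etcd-root-ca.pem --cert /etc/certs/etcd-158.pem \
#   --key /etc/certs/etcd-158-key.pem endpoint health
```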

Kubernetes API server flags that need to change:

```
--etcd-servers=https://192.168.2.158:2379,https://192.168.2.159:2379,https://192.168.2.160:2379
--etcd-certfile=/etc/certs/etcd-158.pem
--etcd-keyfile=/etc/certs/etcd-158-key.pem
--etcd-cafile=/etc/certs/etcd-root-ca.pem
```

Configure the kubelet. Because etcd was created first, you must override the kubeadm-provided kubelet unit file by creating a new file with higher precedence.

```shell
sudo cat /etc/systemd/system/multi-user.target.wants/kubelet.service
sudo mkdir -p /etc/systemd/system/kubelet.service.d/
# "sudo cat << EOF > file" would redirect as the non-root user; use tee instead
cat << EOF | sudo tee /etc/systemd/system/kubelet.service.d/20-etcd-service-manager.conf
[Service]
ExecStart=
# Replace "systemd" below with the cgroup driver used by your container runtime.
# The kubelet's default is "cgroupfs".
# If needed, replace the value of "--container-runtime-endpoint" with a different container runtime.
ExecStart=/usr/bin/kubelet --address=127.0.0.1 --pod-manifest-path=/etc/kubernetes/manifests --cgroup-driver=systemd
Restart=always
EOF
sudo systemctl daemon-reload
sudo systemctl restart kubelet
sudo systemctl status kubelet
journalctl -xeu kubelet

cat /etc/kubernetes/manifests/etcd.yaml
cat /etc/kubernetes/kubelet.conf
cat /var/lib/kubelet/config.yaml
cat /etc/kubernetes/bootstrap-kubelet.conf
```

Reference: https://kubernetes.io/zh/docs/setup/best-practices/certificates/

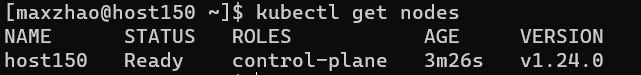

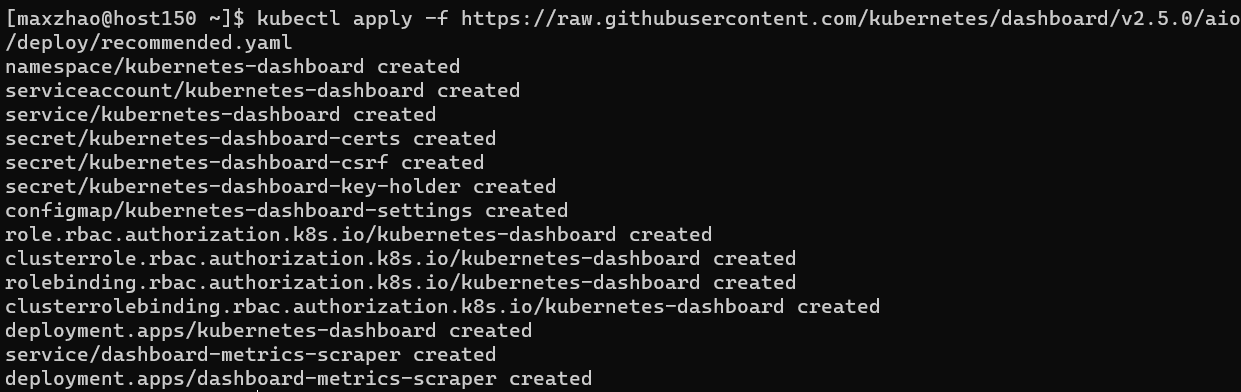

Install the dashboard

```shell
cd ~
wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.5.0/aio/deploy/recommended.yaml -O recommended.yaml --no-check-certificate
kubectl apply -f recommended.yaml
```

```shell
kubectl get pods --all-namespaces
kubectl describe pod -n kubernetes-dashboard kubernetes-dashboard-6cdd697d84-9nv4w
kubectl logs -f -n kubernetes-dashboard kubernetes-dashboard-6cdd697d84-9nv4w
```

Enable Dashboard access

http://192.168.2.150:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/

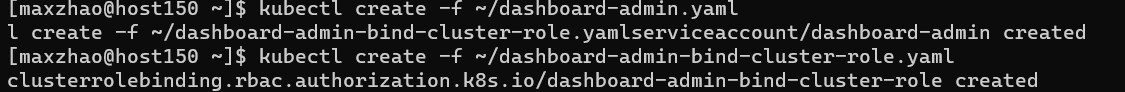

Create an admin user

```shell
vim ~/dashboard-admin.yaml
```

Contents:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: dashboard-admin
  namespace: kubernetes-dashboard
```

Grant permissions to the user

```shell
vim ~/dashboard-admin-bind-cluster-role.yaml
```

Contents:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: dashboard-admin-bind-cluster-role
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: dashboard-admin
  namespace: kubernetes-dashboard
```

Apply them:

```shell
kubectl create -f ~/dashboard-admin.yaml
kubectl create -f ~/dashboard-admin-bind-cluster-role.yaml
```

View and copy the token

```shell
# Before Kubernetes 1.24: read the token from the auto-created ServiceAccount Secret
kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep dashboard-admin | awk '{print $1}')

# Kubernetes 1.24+ no longer auto-creates token Secrets for ServiceAccounts; request a token instead
kubectl -n kubernetes-dashboard create token dashboard-admin
```

Open the following address and log in with that token:

https://192.168.2.150:30000

Deploying applications

Deploy Nginx in the Kubernetes cluster

```shell
kubectl create deployment nginx --image=nginx
kubectl expose deployment nginx --port=80 --type=NodePort
kubectl get pod,svc
```

Access it at: http://NodeIP:Port

Deploy Tomcat in the Kubernetes cluster

```shell
kubectl create deployment tomcat --image=tomcat
kubectl expose deployment tomcat --port=8080 --type=NodePort
```

Access it at: http://NodeIP:Port

Deploying a microservice (Spring Boot) on K8s

1. Package the project (jar or war); tools such as git, maven and jenkins can help.
2. Write a Dockerfile and build an image.
3. `kubectl create deployment <name> --image=<your image>`
4. Your Spring Boot application is now deployed, running as a container inside a pod.

Uninstall the cluster

```shell
kubectl config delete-cluster k8s-cluster
echo "y" | kubeadm reset
rm -rf /etc/kubernetes/
rm -rf $HOME/.kube/config
```

Remove Calico leftovers

```shell
rm -rf /etc/cni/net.d/
rm -rf /var/log/calico/
```

Remove a node

Talking to the control-plane node with the appropriate credentials, run:

```shell
kubectl drain <node name> --delete-emptydir-data --force --ignore-daemonsets
```

Before removing the node, reset the state installed by kubeadm:

```shell
echo "y" | kubeadm reset
```

The reset process does not reset or clean up iptables rules or IPVS tables. If you want to reset iptables, you must do so manually:

```shell
iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X
```

If you want to reset the IPVS tables, run:

```shell
ipvsadm -C   # same as: ipvsadm --clear
```

Now remove the node:

```shell
kubectl delete node <node name>
```

If you want to start over, simply run kubeadm init or kubeadm join with the appropriate arguments.

Uninstall containerd.io

```shell
sudo yum remove -y containerd.io
sudo rm -rf /var/lib/containerd
```

References

Kubernetes container runtime: Docker deprecated, moving to Containerd

Kubernetes02: Container runtimes: choosing between Docker and Containerd, and hands-on Containerd practice

Appendix

CentOS 7 mirror

```shell
mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup
# Download the Aliyun repo file with either wget or curl
wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo
curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo
yum makecache
```

Docker

Virtual machines need the ISO image mounted.

```shell
sudo mkdir /mnt/cdrom
sudo mount /dev/cdrom /mnt/cdrom/
sudo yum install -y yum-utils
sudo yum-config-manager \
  --add-repo \
  https://download.docker.com/linux/centos/docker-ce.repo
```

Using the Alibaba Cloud mirror

```shell
sudo yum install -y yum-utils device-mapper-persistent-data lvm2
sudo yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
sudo sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo
sudo yum makecache fast
```

containerd registry mirrors

```shell
vim /etc/containerd/config.toml
```

Find `[plugins."io.containerd.grpc.v1.cri".registry.mirrors]` and add:

```toml
[plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
  endpoint = ["https://docker.mirrors.ustc.edu.cn", "https://registry.cn-hangzhou.aliyuncs.com", "https://registry.docker-cn.com", "https://aj2rgad5.mirror.aliyuncs.com"]
```

Kubelet fails to start

```shell
journalctl -xefu kubelet
journalctl -f -u kubelet
```

Troubleshooting

pod/kube-proxy CrashLoopBackOff: check the IPVS installation steps.

Error: could not find a JWS signature in the cluster-info ConfigMap

```shell
kubectl get configmap cluster-info --namespace=kube-public -o yaml
cat /etc/kubernetes/kubelet.conf
openssl x509 -pubkey -in /etc/kubernetes/pki/etcd/etcd-ca.crt | openssl rsa -pubin -outform der 2>/dev/null | \
  openssl dgst -sha256 -hex | sed 's/^.* //'
openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | \
  openssl dgst -sha256 -hex | sed 's/^.* //'
```

JWS problem during join

```
The cluster-info ConfigMap does not yet contain a JWS signature for token ID "j2lxkq", will try again
```

Here `kubeadm token list` shows that all the tokens are fine.

The JWS in cluster-info is only created once kube-controller-manager is running (its bootstrap signer controller signs the ConfigMap).

```shell
kubectl describe pod -n kube-system kube-controller-manager-master-158
kubectl logs -n kube-system kube-controller-manager-master-158
# --v=10 raises kubectl's own verbosity, not the pod's log level
kubectl logs -n kube-system kube-controller-manager-master-158 --v=10
```
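The signature is stored in the cluster-info ConfigMap under a `jws-kubeconfig-<token-id>` key, where the token ID is the part of the token before the dot (`j2lxkq` in the message above). A sketch to check whether the token you join with has been signed yet; `token_id` and `has_jws` are hypothetical helpers:

```shell
#!/usr/bin/env bash
# token_id TOKEN: the part of a bootstrap token before the dot.
token_id() { printf '%s\n' "${1%%.*}"; }

# has_jws TOKEN
# Reads the cluster-info ConfigMap YAML on stdin and returns 0 if it has a
# "jws-kubeconfig-<token-id>" entry for TOKEN.
has_jws() {
  grep -q "^[[:space:]]*jws-kubeconfig-$(token_id "$1"):"
}

# On a control-plane node:
# kubectl -n kube-public get configmap cluster-info -o yaml \
#   | has_jws fk3wpg.gs0mcv4twx3tz2mc && echo "signed" || echo "not signed yet"
```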

Node NotReady

```shell
kubectl describe nodes master-160
```

cni plugin not initialized

```shell
sudo systemctl restart containerd
```

`failed to \"CreatePodSandbox\" for \"coredns`

```
no such file or directory: check that the calico/node container is running and has mounted /var/lib/calico/\""
```

This happens because Calico did not start successfully.

Common operations

```shell
# Nodes, services, namespaces
kubectl get nodes
kubectl get node master-158
kubectl get service
kubectl get namespaces
kubectl create namespace xxxxxx
kubectl delete namespaces xxxxxx

# Pods
kubectl get pods --all-namespaces
kubectl get pods -A
kubectl exec -it podName -n uat -- bash
kubectl get pod,svc -n kube-system
kubectl create -f xxxx.yaml --namespace=xxxx
kubectl get pod -n namespace_name xxxxpod -o=yaml
kubectl describe pod -n namespace_name xxxxpod
kubectl get pod -n kube-system -l k8s-app=flannel -o wide
kubectl delete pod -n kube-system <pod-name>
kubectl delete pod/<pod-name> -n kube-system
kubectl describe node master-158

# ConfigMaps and other objects created by kubeadm
kubectl describe cm -n kube-system kubeadm-config
kubectl get cm -n kube-system kubeadm-config -o yaml
kubectl describe cm -n kube-system kubelet-config
kubectl describe cm -n kube-system kube-proxy
kubectl get cm -n kube-system coredns -o yaml
kubectl get cm -n kube-system kube-proxy -o yaml
kubectl describe cm -n kube-system
kubectl edit cm -n kube-system kubeadm-config
kubectl edit cm -n kube-system kubelet-config
kubectl edit cm -n kube-system kube-proxy
kubectl describe ns default
kubectl -n kube-system get serviceaccount -l k8s-app=kubernetes-dashboard -o yaml
kubectl -n kube-system describe secrets secrets.name

# Re-apply node configuration after editing the ConfigMaps
kubeadm upgrade node phase kubelet-config --v=10
systemctl restart kubelet
kubeadm upgrade node phase control-plane --v=5
kubeadm upgrade node phase preflight --v=5

# Cluster information
kubectl cluster-info
kubectl -s https://192.168.2.151 get componentstatuses
kubectl -s http://192.168.2.151 get componentstatuses

# Built-in API documentation
kubectl explain pod
kubectl explain deployment
kubectl explain deployment.spec
kubectl explain deployment.spec.replicas

# Shell into a flannel pod
kubectl -n kube-system exec -it kube-flannel-ds-f92wg -- sh
kubectl -n kube-system exec -it kube-flannel-ds-f92wg -- bash
```

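Docker or Containerd

The kubelet interacts with the container runtime through the Container Runtime Interface (CRI) to manage images and containers.

Common container runtimes:

How do the call chains differ?

With Docker as the K8s container runtime, the call chain is: kubelet --> dockershim (inside the kubelet process) --> dockerd --> containerd. (dockershim was removed from the kubelet in Kubernetes 1.24.)

With containerd as the K8s container runtime, the call chain is: kubelet --> CRI plugin (inside the containerd process) --> containerd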

CNI networking

| Item | Docker | Containerd |
|---|---|---|
| Who calls CNI | The docker-shim inside the kubelet | The CRI plugin built into containerd (containerd 1.1 and later) |
| How CNI is configured | kubelet flags `--cni-bin-dir` and `--cni-conf-dir` | containerd config file (toml): `[plugins.cri.cni] bin_dir = "/opt/cni/bin" conf_dir = "/etc/cni/net.d"` |

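Container logs and related parameters

| Item | Docker | Containerd |
|---|---|---|
| Storage path | With Docker as the K8s container runtime, docker writes container logs to disk under a directory such as /var/lib/docker/containers/$CONTAINERID. The kubelet creates symlinks under /var/log/pods and /var/log/containers that point to the log files in /var/lib/docker/containers/$CONTAINERID. | With containerd as the K8s container runtime, the kubelet writes container logs to disk under /var/log/pods/$CONTAINER_NAME and creates symlinks in /var/log/containers that point to the log files. |
| Configuration | In the docker config file: `"log-driver": "json-file", "log-opts": {"max-size": "100m","max-file": "5"}` | Option 1, kubelet flags: `--container-log-max-files=5 --container-log-max-size="100Mi"`. Option 2, in KubeletConfiguration: `"containerLogMaxSize": "100Mi", "containerLogMaxFiles": 5,` |
| Saving container logs to a data disk | Mount the data disk at "data-root" (default /var/lib/docker). | Create a symlink /var/log/pods pointing to a directory under the data disk mount point. In TKE, choosing "store containers and images on the data disk" creates the /var/log/pods symlink automatically. |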

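Choosing a container runtime:

Common Docker and Containerd commands

| Image operation | Docker | Containerd |
|---|---|---|
| List local images | docker images | crictl images |
| Pull an image | docker pull | crictl pull |
| Push an image | docker push | none |
| Remove a local image | docker rmi | crictl rmi |
| Inspect an image | docker inspect IMAGE-ID | crictl inspecti IMAGE-ID |

| Container operation | Docker | Containerd |
|---|---|---|
| List containers | docker ps | crictl ps |
| Create a container | docker create | crictl create |
| Start a container | docker start | crictl start |
| Stop a container | docker stop | crictl stop |
| Remove a container | docker rm | crictl rm |
| Inspect a container | docker inspect | crictl inspect |
| attach | docker attach | crictl attach |
| exec | docker exec | crictl exec |
| logs | docker logs | crictl logs |
| stats | docker stats | crictl stats |

| Pod operation | Docker | Containerd |
|---|---|---|
| List pods | none | crictl pods |
| Inspect a pod | none | crictl inspectp |
| Run a pod | none | crictl runp |
| Stop a pod | none | crictl stopp |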

Article URL: https://github.com/maxzhao-it/blog/post/cdb1e23a/